Preface

本书并不是关于如何正确并且优雅的书写代码的,因为我假设你已经了解如何做到那些了。尽管本书有关于跟踪诊断,以便帮助你找到程序瓶颈和不必要的资源使用,以及性能调优的章节,但是,本书也不是真正的关于诊断和性能调优的书。

这两章是全书的最后部分,整本书都在为这些章节做准备。但是本书的真正目标是把所有的信息和细节展示出来,以便你真正的理解你的Erlang应用的性能表现。

关于本书

~~~~~

任何人期望:调整 Erlang 安装,了解如何调试 VM 崩溃,改进 Erlang 应用性能,深入理解 Erlang 如何工作,学习如何构建你自己的运行时环境

如果你想要调试VM,扩展 VM,调整性能,请跳到最后一章,但是想要真正理解那一章,你需要阅读这本书。

阅读方法

Erlang RunTime System (ERTS) 是一个有许多组件相互依赖的复杂系统。他使用了非常易于移植的方法编码,以便能够在从电脑棒到上TB内存的多核计算机上运行。为了能够为你的应用优化性能,你就不能只了解你的应用本身,同时需要深刻理解ERTS。

有了 ERTS 如何运行的知识,你就能够理解你的应用在 ERTS 之上运行的行为模式,也可以修补你应用的性能问题。在本书的第二部分,我们将深入介绍如何成功的运行,监控和扩展你的 ERTS 应用。

本书的读者不必是一位 Erlang 程序员,但需要对 Erlang 是什么有基本了解,接下来这段内容将给你一些关于 Erlang 的背景信息。

Erlang

本节中,我们将一起了解一些基础的 Erlang 概念,这对理解本书至关重要。

Erlang 被以它的发明人 Joe Armstrong 为代表的人称为一门面向并发的语言。并发在 Erlang 语言中处于核心地位,为了能够理解 Erlang 系统如何工作,你需要理解 Erlang 的并发模型。

首先,我们需要区分 “并发” 和 “并行”。本书中,“并发” 的概念是指2个或者更多的进程 能 相互独立的执行,这可以是先执行一个进程然后和其余进程交织执行,或者它们并行执行。提到 “并行” 执行时,我们是指多个进程在同一时刻使用多个物理执行单元执行。“并行”可能在不同层面上实现,例如通过单核的执行流水线的多个执行单元,通过CPU的多核芯,通过单一计算机的多个CPU,或者通过多个计算机实现。

Erlang 通过进程实现并发。从概念上讲,Erlang 的进程与大多数的操作系统进程类似,它们并行执行并且通过信号通信。但是实践上来说,Erlang 进程比绝大多数的操作系统进程都轻量,这是一个巨大的差异。在一些并发编程语言中,与 Erlang 进程对等的概念是 agents 。

Erlang 通过在 Erlang 虚拟机(BEAM)中交织的执行进程来达到并发的目的。在多核处理器上,BEAM 也可以通过运行在每个核心上运行一个调度器,在每个调度器上运行一个 Erlang 进程来实现并行,Erlang 系统的设计人员可以将系统分布在不同计算机上来达成更进一步的并行。

一个典型的 Erlang 系统(在 Erlang 中内置服务器或者服务)包含一定数量 Erlang 应用(application),对应于磁盘上的一个目录。每一个应用由若干 Erlang 模块(module)组成,模块对应于这个目录中的一些文件。每个模块包含若干函数(function),每个函数由若干表达式(expression)组成。

Erlang 是一个函数式语言,它没有语句,只有表达式。Erlang 表达式能被组合成 Erlang 函数。函数接受若干参数并且返回一个值。在 Erlang Code Examples 中,我们可以看到若干 Erlang 表达式和函数。

%% Some Erlang expressions:

true.

1+1.

if (X > Y) -> X; true -> Y end.

%% An Erlang function:

max(X, Y) ->

if (X > Y) -> X;

true -> Y

end.Erlang VM 实现了许多 Erlang 内建函数 (built in functions 或 BIFs),这样做有效率方面的原因,例如 lists:append 的实现(它也可以在 Erlang 实现),同时也有在实现一些底层功能时, Erlang 本身较难实现的原因,例如 list_to_atom。

从 Erlang/OTP R13B03 版本开始,你也可以使用 C 语言和 Native Implemented Functions (NIF) 接口来实现自己的函数实现。

致谢

首先我要感谢 Ericsson OTP Team,感谢他们维护 Erlang 和 Erlang 运行时,并且耐心的回复我的提问。特别感谢Kenneth Lundin, Björn Gustavsson, Lukas Larsson, Rickard Green 和 Raimo Niskanen。

同时感谢本书的主要贡献者 Yoshihiro Tanaka, Roberto Aloi 和 Dmytro Lytovchenko,感谢 HappiHacking 和 TubiTV 对本书的赞助。

最后,感谢每一位编辑和修正本书的贡献者。

Yoshihiro Tanaka Roberto Aloi Dmytro Lytovchenko Anthony Molinaro Alexandre Rodrigues Yoshihiro TANAKA hitdavid Ken Causey Lukas Larsson Kim Shrier David Trevor Brown Andrea Leopardi Anton N Ryabkov Greg Baraghimian Marc van Woerkom Michał Piotrowski Ramkumar Rajagopalan Yves Müller techgaun Juan Facorro Cameron Price Kyle Baker Buddhika Chathuranga Luke Imhoff fred Alex Jiao Milton Inostroza PlatinumThinker yoshi Benjamin Tan Wei Hao Alex Fu Yago Riveiro Antonio Nikishaev Amir Moulavi Eric Yu Erick Dennis Davide Bettio tomdos Jan Lehnardt Chris Yunker

~~~~~

I: 理解 ERTS

1. Erlang 运行时系统介绍

Erlang 运行时系统(ERTS) 是一个有许多组件相互依赖的复杂系统。他使用了非常易于移植的方法编码,以便能够在从电脑棒到上TB内存的多核计算机上运行。为了能够为你的应用优化性能,你就不能只了解你的应用本身,同时需要深刻理解ERTS。

1.1. ERTS 和 Erlang 运行时系统

任何 Erlang 运行时系统 和 Erlang 运行时的特定实现系统有一点区别。由爱立信开发维护的 Erlang/OTP 是 Erlang 和 Erlang 运行时系统事实上的标准实现。在本书中,我将参考这个实现为 ERTS,将 Erlang RunTime System 中 T 字母大写 (See Section 1.3 for a definition of OTP)。

Erlang 运行时系统或者 Erlang 虚拟机并没有一个官方定义。你可以想象这样一个理想的柏拉图式系统看起来就像是ERTS,并且移除了所有特定实现细节。不幸的是,这是一个循环定义,因为你需要了解通用定义以便能够鉴别一个特定实现细节。在Erlang 的世界里,我们通常比较务实而不去担心这些。

我们将尝试使用术语 Erlang Runtime System 来指代 Erlang 运行时系统的一般的想法。反之,由 Ericsson 开发维护的特定实现被我们称为 Erlang 运行时系统或简称 ERTS.

Note 本书主要关于 ERTS,很小部分与通用 Erlang Runtime System 相关。你可以假设我们一直在基于 Ericsson 的实现讨论问题,除非我们明确声明我们在讨论通用原则。

1.2. 如何阅读本书

在本书的 Part II 部分,我们将关注如何为你的应用调整运行时系统,以及分析和调试你的应用和运行时系统。为了真正了解如何如何调整系统,你也需要了解系统。在本书的 Part I 部分,你讲深入理解运行时系统的工作原理。

在接下来 Part I 的章节中,我们将深入系统的各个组件。即使你并没有对全部组件有全面的理解,只要基本清楚每个组件是什么,也能够顺利阅读这些章节。剩余的介绍章节将向你介绍足够的基础信息和词汇术语,是你能够随意在这些章节之间切换阅读。

如果你有充裕时间,建议首次阅读按照顺序进行。有关 Erlang 和 ERTS 的词汇术语都在它们首次出现时解释。这样就可以在对某个特定组件有疑问时,使用 Part I 作为参考性的后续反复阅读。

1.3. ERTS

此处我们将对 ERTS 的主要组件以及一些词汇有一个概览,并在后续章节做更细节的描述。

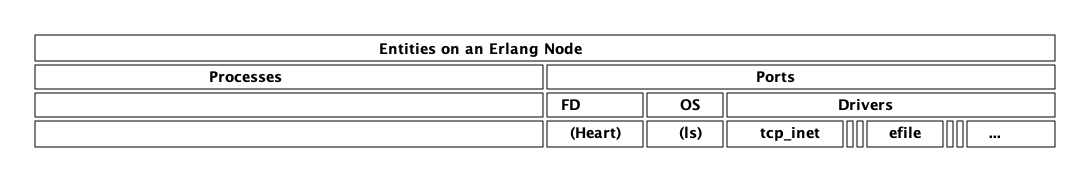

1.3.1. Erlang 节点 (ERTS)

当你启动一个 Elixir / Erlang 应用或者系统,实际上你启动的是一个 Erlang 节点。这个节点运行了 ERTS 以及虚拟机 BEAM(或者也可能是其他的 Erlang 实现(参见 Section 1.4))

你的应用代码在 Erlang 节点上运行,节点的各层也同时对你的应用性能表现产生影响。我们来看一下组成节点的层次栈。这将帮你理解将你的系统运行在不同环境的选项。

使用OO的术语,可以说一个 Erlang 节点就是一个 Erlang 运行时系统类对象。在 Java 世界,等价的概念是 JVM 实例。

所有的 Elixir / Erlang 代码执行都在节点中完成。每个 Erlang 节点运行在一个操作系统进程中,在同一台计算机中可以同时运行多个 Erlang 节点。

根据 Erlang OTP 文档,一个节点实际上是一个命名的执行运行时系统。这样说来,如果你启动了 Elixir,但并没有通过命令行的以下开关来指定节点名字 --name NAME@HOST 或 --sname NAME (在 Erlang 运行时中是 -name 和 -sname ),你会启动一个运行时,但是不能叫节点。此时,函数 Node.alive? (在 Erlang 中为 is_alive()) 返回 false。

$ iex

Erlang/OTP 19 [erts-8.1] [source-0567896] [64-bit] [smp:4:4]

[async-threads:10] [hipe] [kernel-poll:false]

Interactive Elixir (1.4.0) - press Ctrl+C to exit (type h() ENTER for help)

iex(1)> Node.alive?

false

iex(2)>

运行时系统 这个术语的使用并不严格。即使你并没有命名一个节点,也可以取得它的名字。在 Elixir 中,使用`Node.list` 参数 :this, 在 Erlang 中调用 `nodes(this).`即可:

iex(2)> Node.list :this [:nonode@nohost] iex(3)>

本书中,我们将使用术语 节点 来指代任何运行中的运行时实例,而不论它是否被命名。

1.3.2. 执行环境中的分层

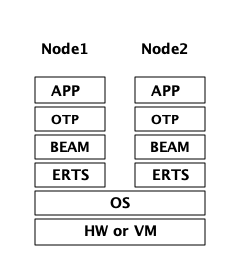

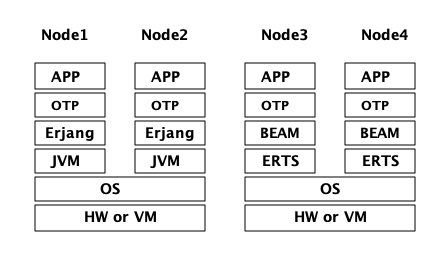

你的程序(应用)是在一个或者多个节点上运行的,它的性能不只取决于你的应用程序代码,同时取决于在 ERTS 栈 (ERTS stack)中,你应用以下的各层。图 Figure 1 中,你可以看到同一台计算机运行2个 Erlang 节点时的 ERTS 栈。

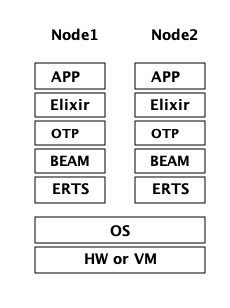

如果你用 Elixir,栈中还会有其他的层次。

我们来观察栈中各层,看你如何为应用程序调优各层。

栈的最底部是程序运行依赖的硬件。改善你应用程序运行性能的最简单的方法是使用更好的硬件。如果因为经济、物理条件约束或者处于对环境问题的担忧等原因阻碍你升级硬件,你可能需要开始探索栈中的更高层次。

选择硬件的2个最主要考量是:它是否是多核系统,它是32位系统还是64位系统。计算机是否多核以及它是32/64位系统决定了你能够使用何种 ERTS 版本。

向上第二层是操作系统层。ERTS 能够在大多数的 Windows 和 包含 Linux, VxWorks, FreeBSD, Solaris, 以及 Mac OS X 的 POSIX “兼容” 系统上运行。如今,大部分的 ERTS 开发工作都是在 Linux 和 OS X 上完成的,所以你可以在这些平台上 ERTS 会有最佳的性能表现。Ericsson 一直以来在许多内部项目中使用 Solaris 平台,多年以来 ERTS 在 Solaris 上一直被调优。视你的使用场景,你也可能在 Solaris 上获得最佳性能。操作系统的选型往往被性能需求之外的因素约束。如果你在构建一个嵌入式应用,你可能需要选择 Raspbian (译注:树莓派系统)或者 VxWork,如果你在构建一些面向终端用户或者客户端的应用,你可能必须使用 Windows。ERTS 的 Windows 版本目前从性能和维护等方面来看,可能并不是最佳的选择,因为它不是最高优先级工作。如果你想使用一个64位版本的 ERTS ,你必须同时选择64位硬件和64位操作系统。本书并不会涉及到很多特定操作系统相关的问题,绝大多数例子假设你是在 Linux 系统上运行。

向上第三层是 Erlang 运行时系统,或者说是 ERTS 层。本层和向上第四层 — Erlang 虚拟机(BEAM)是本书的主要内容。

向上第五层 OTP 提供了 Erlang 标准库支持。OTP的原始含义是 “开放电信平台”(Open Telecom Platform),它包含了若干位构造类似电信交换等鲁棒的应用而提供构建模块的库(例如 supervisor, gen_server and gen_tcp)早期,这些随 ERTS 发布的其他标准库和 OTP 的含义是混杂的。现如今,大多数人将 OTP 和 Erlang 连用为 "Erlang/OTP" 指代 ERTS 以及由 Ericsson 发布的所有 Erlang 库。了解这些标准库并且清楚何时、如何使用它们可以极大地提高应用程序的性能。本书将不涉及任何关于标准库和OTP的细节,涉及这些方面书籍有很多。

如果你运行 Elixir 程序,第6层提供了 Elixir 环境和 Elixir 库。

最后,向上数第7层是你的应用程序以及其中使用的第三方库。应用层可以使用底层提供的所有功能。除了升级硬件,这也是你最容易实现应用性能优化的地方。在 Chapter 18 中介绍了一些诊断优化应用程序的提示和工具。在 Chapter 19 一章中,我们将了解如何找到应用崩溃的原因以及如何查找应用 bug。

有关如何构建运行 Erlang 节点的信息,请参见 Appendix A ,然后通过本书其余部分学习 Erlang 节点的组件知识。

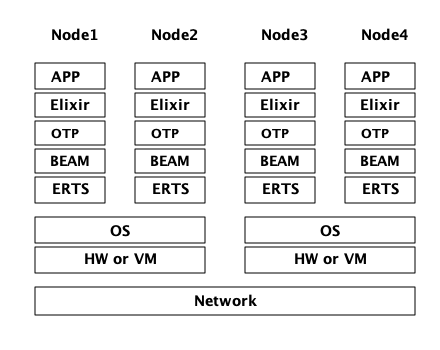

1.3.3. 分布式

Erlang 语言设计者的一个关键洞见是:为了构造一个可以 24小时 * 7天 工作的系统,你需要能够处理硬件失败。所以你需要至少将你的系统部署在2台以上的物理机器上。在每台机器上启动 Erlang 节点后,节点之间互相连接,跨节点的进程可以相互通信,就好像它们运行在同一个节点一样。

1.3.4. Erlang 编译器

Erlang 编译器负责将 Erlang 源代码从 .erl 文件编译为 BEAM 虚拟机代码。编译器本身就是使用 Erlang 编写的,它将自身编译为 BEAM 码,通常在运行的 Erlang 节点可用。为了引导运行时系统,包含编译器在内的数个预先编译好的 BEAM 文件都被放置在 bootstrap 目录。

有关编译器的更多信息可以参考 Chapter 2。

1.3.5. Erlang 虚拟机: BEAM

类似 JVM 是用来执行Java 代码的虚拟机一样,BEAM 是用来执行 Erlang 代码的虚拟机。BEAM 运行在 Erlang 节点上。

就像 ERTS 是 Erlang 运行时系统的更通用概念实现一样, BEAM 是 Erlang 虚拟机(EVM) 的一个通用实现。虽然没有对 EVM 组成结构的定义,但是 BEAM 的指令实际上分2层,分别是通用指令和特定指令。通用指令集可以看作是 EVM 的蓝图。

1.3.6. 进程

一个 Erlang 进程基本上与操作系统进程一样工作。每个进程拥有它自己的内存(mailbox, heap 和 stack)和带有进程信息的进程控制块(process control block , PCB)

所有的 Erlang 代码执行均在进程上下文中完成。一个 Erlang 节点可以拥有分多进程,这些进程可以通过消息传递或信号通信,如果多个节点是连接的,Erlang 进程也可以与其他节点上的进程通信。

想了解更多关于进程和 PCB 的知识,请参考 Chapter 3.

1.3.7. 调度器

调度器负责选择某个 Erlang 进程执行。通常来讲,调度器有2个队列,1个是 ready to run 的进程队列 ready queue ,另一个是等待接受消息的进程队列 waiting queue 。一个 waiting queue 中的进程如果收到了消息,或者接收超时,将被移动到 ready queue 。

调度器从 ready queue 中拿到第一个进程,并将它放到 BEAM 中执行一个_时间片_( time slice)。当时间片耗尽,BEAM会剥夺这个进程的执行,并把它放到 ready queue 的队尾。如果在时间片用尽前,这个进程被 receive 阻塞,他就会被放到 waiting queue 中。

Erlang 天生支持并发,这意味着从概念上讲,每一个进程与其他的进程同时执行,但是事实上,只有1个进程在虚拟机中运行。在多核系统中,Erlang 运行多个调度器,通常每核心一个,每个调度器独有自己的队列。这样 Erlang 获得了真正的并行能力。为了利用多核能力, ERTS 必须使用_SMP_ 被构建 (参见 Appendix A)。 SMP 意即_Symmetric MultiProcessing_,它意味着进程在多核中任意一个核心上运行的能力。

现实世界中,进程优先级等问题会使问题变得更复杂,等待队列使用时间轮实现。所有关于调度器的细节会在 Chapter 11中描述。

1.3.8. Erlang 标签方案

Erlang 是一个动态类型语言,运行时系统需要跟踪所有的数据对象的类型,这是通过标签方案(tagging scheme)完成的。每一个数据对象或指向数据对象的指针同时也会有一个带有其对象数据类型的标签。

一般来说,指针的一些位(bits)会被为标签预留,通过查找对象的标签的位模式(bit pattern),仿真器就可以确定他的数据类型。

这些标签在模式匹配、类型检测、原始操作(primitive operations)和垃圾收集是被使用。

Chapter 4 中完整的描述了标签方案。

1.3.9. 内存处理

Erlang 使用了自动内存管理方案,使得程序员不必担忧内存的分配和回收。每个进程都有可以按需扩容和缩容的堆和栈。

当一个进程出现堆空间不足时,虚拟机会首先尝试通过垃圾回收的方法回收并分配内存。垃圾收集器接下来会找到该进程的栈和堆,并将其中的活动数据复制到一个新的堆中,这样就扔掉了所有死数据。如果做完这些堆空间还是不够用,一个新的更大的堆会被分配出来,活动数据也会被移动到新的堆中。

关于当前的代际复制垃圾收集器的细节,包含被引用计数的 binary 处理,可以在 Chapter 12 章节中找到。

在使用 HiPE (High Performance Erlang ,译者注:类似 JIT ) 兼容本地代码的系统中,每个进程事实上有2个栈,1个 BEAM栈,1个本地代码栈,细节见 Chapter 17 。

1.3.10. 解释器和命令行接口

当你使用 erl 启动 Erlang 节点,可以得到一个命令行提示符。这就是 Erlang read eval print loop (REPL) 或者叫做 command line interface (CLI) 或简称 Erlang shell.

你可以在 Erlang 节点中输入并且在 shell 中直接执行。这种情况,代码不会被编译为 BEAM 码并被 BEAM执行,而是被 Erlang 解释器解析和解释执行。通常,解释后的代码与编译后的代码表现一致,但也存在一些差异,差异和其他方面的问题将在 Chapter 20 介绍。

1.4. 其他的 Erlang 实现

本书主要关注 Ericsson/OTP 实现的“标准” Erlang,即 ERTS。也有一些可用的其他 Erlang 实现,我们将在本节简要提及。

1.4.1. Erlang on Xen

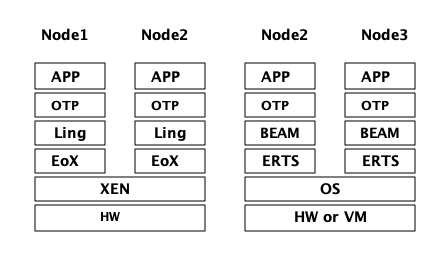

Erlang on Xen (链接: http://erlangonxen.org,译注,网页已经没人维护) 是一个直接在服务器硬件上运行,中间没有操作系统层而只有一个 Xen 客户端薄层的 Erlang 实现。

这个运行在 Xen 上的虚拟机叫做 Ling,他同 BEAM 几乎100%二进制兼容。在 xref:the_eox_stack 中可以看到 Erlang 的 Xen 实现栈与 ERTS 的区别。需要注意的是,Xen 栈上的 Erlang 下没有操作系统。

Ling 实现了 BEAM 通用指令集,他可以重用 OTP 层的 BEAM 编译器来将 Erlang 编译成 Ling 代码。

1.4.2. Erjang

Erjang (链接: http://www.erjang.org,译注,项目已经废弃5年以上,最高支持Java 7) 是一个在 JVM 上运行的 Erlang 实现。它加载+.beam+ 文件后,将其重编译为 Java .class 文件。他与 BEAM 几乎 100% 二进制兼容。

图 xref:the_erjang_stack 中可以看到 Erlang 的 Erjang 实现栈与 ERTS 的区别。需要注意的是,这个方案中 JVM 替代了 BEAM 作为虚拟机,Erjang 在虚拟机上使用 Java 实现 ERTS 提供的服务。

现在,你应该对 ERTS 的各主要部分有了基本的了解,也了解了继续深入各组件所必须的词汇术语。如果你渴望了解某一个具体的组件,现在就可以跳到对应章节阅读了。或者你需要找一个特定问题的解决方案,你可以跳到 Part II 章节,尝试使用各种方法来调优、调试你的系统。

2. 编译器

虽然本书不是一本设计 Erlang 编程语言的书,但是,ERTS 的目标是运行 Erlang 代码,所以你需要了解如何编译 Erlang 代码。本章将涉及到用来生成可读的 BEAM 码的编译器选项,以及如何位生成的 beam 文件增加调试信息。本章的最后,也有一节关于 Elixir 编译器的内容。

那些对于将他们喜爱的语言编译为 ERTS 代码的读者,可以关注本章包含的关于编译器中的中间格式区别的详情,以及如何在 beam 编译器后台挂载你的编译器的信息。

我会展示解析转换,并通过样例来说明如何通过它们来调整 Erlang 语言。

2.1. 编译 Erlang

Erlang 被从 .erl 格式文件的模块源代码,编译成二进制 .beam 文件

编译器可以从操作系统终端,通过 erlc 启动:

> erlc foo.erl编译器也可以在 Erlang 终端中,使用 c 或者调用 compile:file/{1,2} 来调用。

1> c(foo).或者

1> compile:file(foo).compile:file 的第二个可选参数接受编译器选项 list。全部的可选参数清单可以在编译器模块的文档中找到,参见 http://www.erlang.org/doc/man/compile.html 。

通常,编译器会将 Erlang 源代码从 .erl 格式文件,编译并写入到二进制 .beam 文件中。你也可以通过使用编译器的 binary 选项,将编译二进制结果作为Erlang 项式(Erlang term)直接输出。这个选项被重载以用来使用数据来返回中间格式结果,而不是将其写入文件。如果你期望编译器返回Core Erlang 代码,可以使用 [core, binary] 选项。

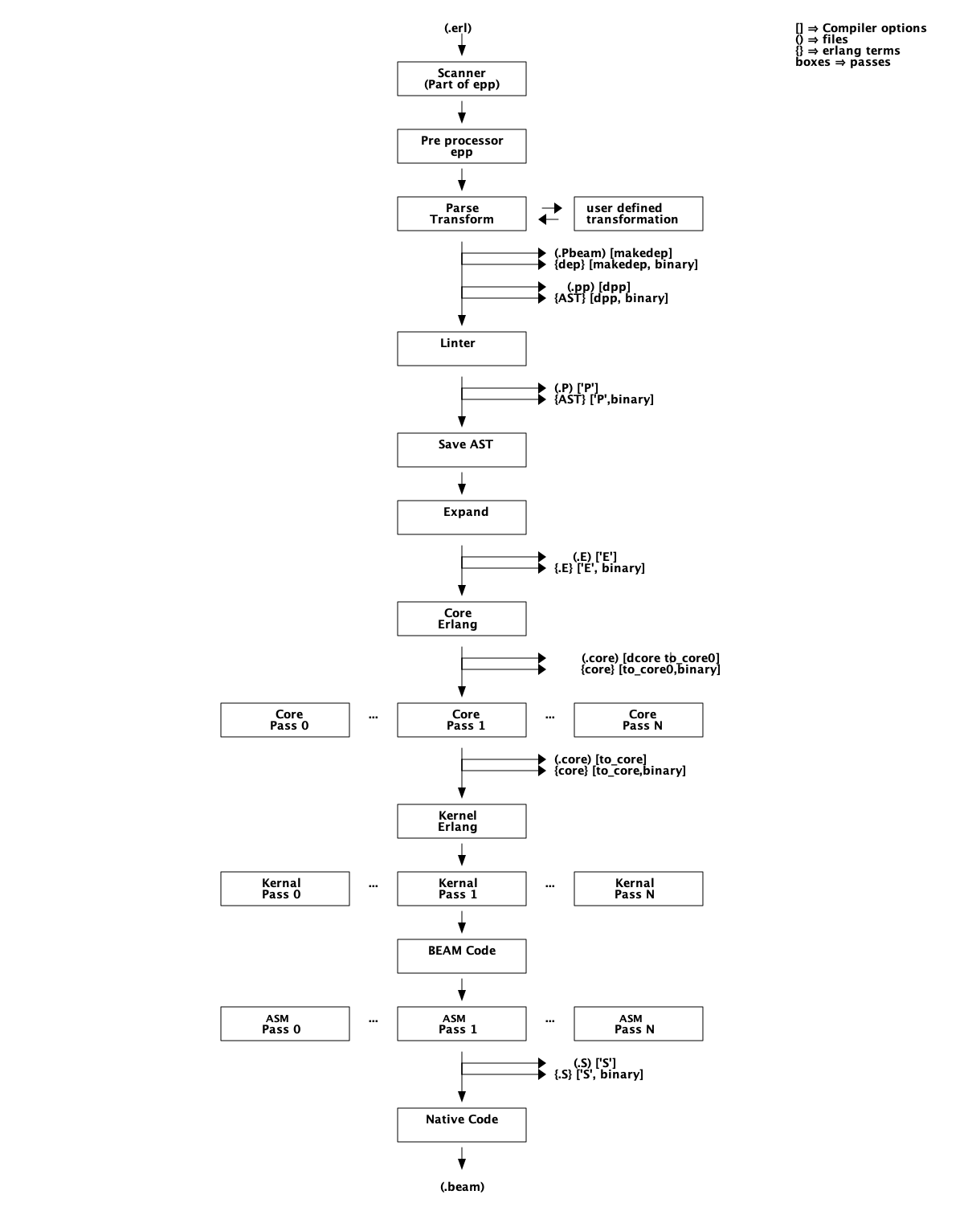

编译器的执行,包含由如图 Figure 6 中所示的若干“遍”(pass)。

如果你想看到完整且最新的编译器的“遍”清单,可以在 Erlang 终端中运行 compile:options/0。当然,有关浏览器的最终信息来源来自于 compile.erl

2.2. 产生中间结果输出

阅读由编译器产生的代码对于试图理解虚拟机如何工作有很大帮助。幸运的是,编译器可以输出每遍后产生的中间代码,以及最终的 beam 码。

我们来尝试一下这些新知识,并且观察一下生成的代码。

1> compile:options().

dpp - Generate .pp file

'P' - Generate .P source listing file...

'E' - Generate .E source listing file

...

'S' - Generate .S file

我们来尝试一个小例子程序 "world.erl":

-module(world).

-export([hello/0]).

-include("world.hrl").

hello() -> ?GREETING.以及包含文件: "world.hrl"

-define(GREETING, "hello world").如果此时使用 'P' 选项编译以得到解析后的文件,你会得到一个 "world.P" 文件。

2> c(world, ['P']).

** Warning: No object file created - nothing loaded **

ok在结果输出的 .P 文件中,你可以看到应用预处理器(解析转换)处理后的美化格式版本的代码:

-file("world.erl", 1).

-module(world).

-export([hello/0]).

-file("world.hrl", 1).

-file("world.erl", 4).

hello() ->

"hello world".要查看所有的源代码转换执行完毕后代码的样子,可以使用 'E' 选项。

3> c(world, ['E']).

** Warning: No object file created - nothing loaded **

ok这将输出一个 .E 文件,其中所有的编译器指令都被移除,并且内建函数 module_info/{1,2} 也被加入到源代码中。

-vsn("\002").

-file("world.erl", 1).

-file("world.hrl", 1).

-file("world.erl", 5).

hello() ->

"hello world".

module_info() ->

erlang:get_module_info(world).

module_info(X) ->

erlang:get_module_info(world, X).我们将在观察 Section 2.3.2 解析转换时,使用 'P' 和 'E' 选项,但首先我们先来看看汇编器生成的 BEAM 码。使用编译器选项 'S' 可以得到一个内容为源代码对应的每条 BEAM 指令的 Erlang 项式的 .S 文件。

3> c(world, ['S']).

** Warning: No object file created - nothing loaded **

okworld.S 文件看起来是这样的:

{module, world}. %% version = 0

{exports, [{hello,0},{module_info,0},{module_info,1}]}.

{attributes, []}.

{labels, 7}.

{function, hello, 0, 2}.

{label,1}.

{line,[{location,"world.erl",6}]}.

{func_info,{atom,world},{atom,hello},0}.

{label,2}.

{move,{literal,"hello world"},{x,0}}.

return.

{function, module_info, 0, 4}.

{label,3}.

{line,[]}.

{func_info,{atom,world},{atom,module_info},0}.

{label,4}.

{move,{atom,world},{x,0}}.

{line,[]}.

{call_ext_only,1,{extfunc,erlang,get_module_info,1}}.

{function, module_info, 1, 6}.

{label,5}.

{line,[]}.

{func_info,{atom,world},{atom,module_info},1}.

{label,6}.

{move,{x,0},{x,1}}.

{move,{atom,world},{x,0}}.

{line,[]}.

{call_ext_only,2,{extfunc,erlang,get_module_info,2}}.因为这是一个由点 (".",译者注:点是每行的结尾) 分隔的 Erlang 项式组成的文件,你可以使用如下命令将这个文件读入 Erlang 终端:

{ok, BEAM_Code} = file:consult("world.S").

汇编码大部分按照原始的源代码格式布局。首条指令定义了代码模块的名称。注释中提到的版本(%% version = 0) 是 beam 操作码格式的版本(由 beam_opcodes 给出的 beam_opcodes:format_number/0) 接下来是一个导出清单以及编译器属性(本例中没有),这和 Erlang 源码模块中的差不多。 第一条像是 beam 指令的是 {labels, 7} ,它告诉虚拟机代码中共有7个标签(label),使得对代码的一遍处理即可为所有的标签分配空间。 接下来是每个函数的实际代码。第一条指令给出了函数名称,标签数表示的参数个数和入口点。 你可以使用 'S' 选项来尽最大努力使你理解 BEAM 如何工作,我们也将在后续章节这么做。当你开发自己的编程语言,通过Core Erlang 编译为 BEAM 码时,能看到生成的代码也是非常有价值的。

2.3. 编译器的遍(Pass)

接下来几节,我们将深入到图 Figure 6 中所示的编译器的各遍。对于面向 BEAM 的编程语言设计者,这些内容将向你展示使用 宏(macros),解析转换(parse transforms),Core Erlang,BEAM 码等不同方法你可以做什么,以及它们之间是如何相互依赖的。 在调优 Erlang 代码时,通过查看优化前后的生成代码,来了解何种优化在何时,以何种方式起作用是非常有效的。

2.3.1. 编译器 Pass: Erlang 预处理器 (epp)

编译过程起始于一个组合的分词器(或者扫描器)和预处理器。预处理器驱动分词器运行。这意味着宏被以符号的方式展开,而不纯粹是字符串替换(不像是 m4 或 cpp)。你不能够使用 Erlang 宏来定义自己的语法,宏像一个与周围字符独立的符号一样被展开。所以你也不能将一个宏与(它前后连续的)字符连接为新的符号:

-define(plus,+).

t(A,B) -> A?plus+B.This will expand to

t(A,B) -> A + + B.

and not

t(A,B) -> A ++ B.

另一方面,由于宏展开实在符号级别完成的,宏的右值(rhs)也不必是一个合法的 Erlang 项式,例如:

-define(p,o, o]). t() -> [f,?p.

这除了能帮你赢得 Erlang 混乱代码大赛之外,没什么真实用处。记住这个知识的主要用途是,你不能使用 Erlang 预处理器来定义一个与 Erlang 句法不同的编程语言。幸运的是,你可以用其他手段定义新语言,我们将在后文看到这些内容。

2.3.2. 编译器 Pass: 解析转换(Parse Transformations)

调整Erlang语言最简单的方法是通过解析转换(Parse Transformations 或 parse transforms)。解析转换带有各种各样的警告,比如OTP文档中的注释:

| Programmers are strongly advised not to engage in parse transformations and no support is offered for problems encountered. |

当你使用了解析转换,你基本上在写一个额外的编译器“pass”,如果不小心的话,可能会导致意外的结果。你需要在使用解析转换的模块声明对它的使用,这对模块来说是本地的,这样对编译器的调整也比较安全。在我看来,应用解析转换最大的问题在于你自己发明的句法,这可能对别人阅读代码造成许多困难。至少在你的解析转换与广受欢迎的 QLC 等齐名前都如此。

好吧,所以你知道你不应该使用它,但如果你必须使用,你得知道它是什么。解析转换是在抽象语法树(AST)(参见 http://www.erlang.org/doc/apps/erts/absform.html)上运行的函数。编译器依次做预处理,符号化和解析,然后它会用 AST 调用解析转换函数,并期望返回新的AST。

这意味着您不能从根本上改变 Erlang 句法,但是您可以更改语义。举个例子,假如你想在 Erlang 代码中直接写json代码,你也很幸运,因为 json 和 Erlang 的标记是基本上是一样的。另外,由于 Erlang 编译器在解析转换后的 linter pass 才会做大部分的完整性检查工作,所以,可以允许一个不代表有效Erlang的 AST。

要编写解析转换,您需要编写一个Erlang模块(让我们称它为_p_),它导出函数+parse_transform/2+。如果这个模块(我们称其为_m_)的编译过程包含 + {parse_transform p} + 编译器选项,这个函数就会在解析转换 pass 期间被编译器调用。函数的参数是模块 m 的 AST 和调用的编译器时的编译器选项。

|

注意,您不能从文件中给出的任何编译器选项。因为你不能够从代码来给出(编译器)选项,还真有点麻烦。 编译器直到发生在解析转换后的 expand pass才会展开编译器选项。 |

抽象格式的文档确实有些密集,我们很难通过阅读来掌握抽象格式文档。我鼓励您使用句法工具(syntax_tools),特别是 erl_syntax_lib 用于处理AST上的任何重要工作。

在这里,为帮助我们理解,我们将开发一个简单的解析转换例子来理解AST。我们将直接在 AST 上工作,使用老的可靠的 io:format 方法来代替句法工具(syntax_tools)。

首先,我们创建一个可以编译 json_test.erl 的例子:

-module(json_test).

-compile({parse_transform, json_parser}).

-export([test/1]).

test(V) ->

<<{{

"name" : "Jack (\"Bee\") Nimble",

"format": {

"type" : "rect",

"widths" : [1920,1600],

"height" : (-1080),

"interlace" : false,

"frame rate": V

}

}}>>.然后,我们创建一个最小化的解析转换模块 json_parser.erl :

-module(json_parser).

-export([parse_transform/2]).

parse_transform(AST, _Options) ->

io:format("~p~n", [AST]),

AST.这个有代表性的解析转换返回了未经改变的 AST,同时将其打印出来,这样你可以观察 AST 到底是什么样子的。

> c(json_parser).

{ok,json_parser}

2> c(json_test).

[{attribute,1,file,{"./json_test.erl",1}},

{attribute,1,module,json_test},

{attribute,3,export,[{test,1}]},

{function,5,test,1,

[{clause,5,

[{var,5,'V'}],

[],

[{bin,6,

[{bin_element,6,

{tuple,6,

[{tuple,6,

[{remote,7,{string,7,"name"},{string,7,"Jack (\"Bee\") Nimble"}},

{remote,8,

{string,8,"format"},

{tuple,8,

[{remote,9,{string,9,"type"},{string,9,"rect"}},

{remote,10,

{string,10,"widths"},

{cons,10,

{integer,10,1920},

{cons,10,{integer,10,1600},{nil,10}}}},

{remote,11,{string,11,"height"},{op,11,'-',{integer,11,1080}}},

{remote,12,{string,12,"interlace"},{atom,12,false}},

{remote,13,{string,13,"frame rate"},{var,13,'V'}}]}}]}]},

default,default}]}]}]},

{eof,16}]

./json_test.erl:7: illegal expression

./json_test.erl:8: illegal expression

./json_test.erl:5: Warning: variable 'V' is unused

error

因为模块包含无效的 Erlang 语法,故编译+json_test+失败,但是你可以看到AST是什么样子的。现在我们可以编写一些函数来遍历 AST 并将 json 代码回写到 Erlang 代码中。[1]

-module(json_parser).

-export([parse_transform/2]).

parse_transform(AST, _Options) ->

json(AST, []).

-define(FUNCTION(Clauses), {function, Label, Name, Arity, Clauses}).

%% We are only interested in code inside functions.

json([?FUNCTION(Clauses) | Elements], Res) ->

json(Elements, [?FUNCTION(json_clauses(Clauses)) | Res]);

json([Other|Elements], Res) -> json(Elements, [Other | Res]);

json([], Res) -> lists:reverse(Res).

%% We are interested in the code in the body of a function.

json_clauses([{clause, CLine, A1, A2, Code} | Clauses]) ->

[{clause, CLine, A1, A2, json_code(Code)} | json_clauses(Clauses)];

json_clauses([]) -> [].

-define(JSON(Json), {bin, _, [{bin_element

, _

, {tuple, _, [Json]}

, _

, _}]}).

%% We look for: <<"json">> = Json-Term

json_code([]) -> [];

json_code([?JSON(Json)|MoreCode]) -> [parse_json(Json) | json_code(MoreCode)];

json_code(Code) -> Code.

%% Json Object -> [{}] | [{Label, Term}]

parse_json({tuple,Line,[]}) -> {cons, Line, {tuple, Line, []}};

parse_json({tuple,Line,Fields}) -> parse_json_fields(Fields,Line);

%% Json Array -> List

parse_json({cons, Line, Head, Tail}) -> {cons, Line, parse_json(Head),

parse_json(Tail)};

parse_json({nil, Line}) -> {nil, Line};

%% Json String -> <<String>>

parse_json({string, Line, String}) -> str_to_bin(String, Line);

%% Json Integer -> Intger

parse_json({integer, Line, Integer}) -> {integer, Line, Integer};

%% Json Float -> Float

parse_json({float, Line, Float}) -> {float, Line, Float};

%% Json Constant -> true | false | null

parse_json({atom, Line, true}) -> {atom, Line, true};

parse_json({atom, Line, false}) -> {atom, Line, false};

parse_json({atom, Line, null}) -> {atom, Line, null};

%% Variables, should contain Erlang encoded Json

parse_json({var, Line, Var}) -> {var, Line, Var};

%% Json Negative Integer or Float

parse_json({op, Line, '-', {Type, _, N}}) when Type =:= integer

; Type =:= float ->

{Type, Line, -N}.

%% parse_json(Code) -> io:format("Code: ~p~n",[Code]), Code.

-define(FIELD(Label, Code), {remote, L, {string, _, Label}, Code}).

parse_json_fields([], L) -> {nil, L};

%% Label : Json-Term --> [{<<Label>>, Term} | Rest]

parse_json_fields([?FIELD(Label, Code) | Rest], _) ->

cons(tuple(str_to_bin(Label, L), parse_json(Code), L)

, parse_json_fields(Rest, L)

, L).

tuple(E1, E2, Line) -> {tuple, Line, [E1, E2]}.

cons(Head, Tail, Line) -> {cons, Line, Head, Tail}.

str_to_bin(String, Line) ->

{bin

, Line

, [{bin_element

, Line

, {string, Line, String}

, default

, default

}

]

}.现在,我们可以无错的将 json_test 编译通过了:

1> c(json_parser).

{ok,json_parser}

2> c(json_test).

{ok,json_test}

3> json_test:test(42).

[{<<"name">>,<<"Jack (\"Bee\") Nimble">>},

{<<"format">>,

[{<<"type">>,<<"rect">>},

{<<"widths">>,[1920,1600]},

{<<"height">>,-1080},

{<<"interlace">>,false},

{<<"frame rate">>,42}]}]由 parse_transform/2 产生的 AST 必须是合法的 Erlang 代码,除非是你做多个解析转换。(译注:指多次解析转换的中间结果AST),代码的合法性检查是在下边的编译 pass 进行的。

2.3.3. 编译器 Pass: Linter

Linter 为句法正确但是不好的代码生成警告,类似"export_all flag enabled"

2.3.4. 编译器 Pass: 保存抽象语法树(AST)

为了启用对某模块的调试,您可以“调试编译”该模块,即将选项 debug_info 传递给编译器。抽象语法树将被“Save AST”保存,直到编译结束时,它将被写入.beam文件。

重要的是,要注意代码是在任意优化被应用前保存的,所以如果编译器的优化 pass 有 bug,你将在调试器中运行代码时得到不同的行为。如果你正在实现你自己的编译器这可能会把你搞糊涂。

2.3.5. 编译器 Pass: Expand

在扩展(Expand)阶段,诸如 record 等源 erlang 结构将被扩展为底层的 erlang 结构。编译器选项 "-compile(...)" 也会被 扩展 为元数据。

2.3.6. 编译器 Pass: Core Erlang

Core Erlang 是一种适用于编译器优化的严格函数式语言。通过减少表示同一操作的方法的数量,使代码转换更容易。其中一种方法是通过引入 let 和 letrec 表达式来使作用域更明确。

核心Erlang是一种适用于编译器优化的严格函数式语言。通过减少表示同一操作的方法的数量,使代码转换更容易。其中一种方法是通过引入 let 和 letrec 表达式来使作用域更明确。

对于希望在 ERTS 中运行的语言来说,Core Erlang 是最好的目标。它很少更改,并且以一种干净的方式包含了 Erlang 的所有方面。如果您直接针对beam指令集,您将不得不处理更多的细节,并且该指令集通常在每个主要的ERTS版本之间略有变化。另一方面,如果您直接以Erlang为目标,那么您可以描述的内容将受到更大的限制,而且您还必须处理更多的细节,因为 Core Erlang 是一种更干净的语言。

你可以使用 “to_core” 选项来将 Erlang 文件编译为 core erlang,但请注意,这将把 Core Erlang 程序写入带有 “.core" 扩展名的文件。你可以通过编译器选项 "from_core" 来编译来自带有 “.core" 扩展名的 core erlang 文件。

1> c(world, to_core).

** Warning: No object file created - nothing loaded **

ok

2> c(world, from_core).

{ok,world}

注意 .core 文件是用人类可读的 core 格式编写的文本文件。要获得作为 Erlang 项式的核心程序,可以在编译中添加+binary+选项。

2.3.7. 编译器 Pass: Kernel Erlang

Kernel Erlang 是 Core Erlang 的一个扁平版本,它们有一些不同之处。例如,每个变量在一个完整的函数作用域中都是唯一的。模式匹配被编译成更原始的操作。

2.3.8. 编译器 Pass: BEAM 码

正常编译的最后一步是外部 beam 码格式。一些底层的优化,如死代码块消除和窥孔优化是在这个级别上完成的。

BEAM 码在 Chapter 7 和 Appendix B 中有详细描述。

2.3.9. 编译器 Pass: 本地(Native)码

如果您在编译中添加了 native 标志,并且您有一个启用了 HiPE (High Performance Erlang ,译者注:类似 JIT ) 的运行时系统,那么编译器将为您的模块生成本机代码,并将本地代码与 beam 代码一起存储在 .beam 文件中。

2.4. 其他编译器工具

有许多工具可以帮助您处理代码生成和代码操作。这些工具是用 Erlang 编写的,但并不是运行时系统的一部分,但是如果你想在 BEAM 之上实现另一种语言,了解它们是非常好的。

在本节中,我们将介绍三个最有用的代码工具: 词法分析器 (Leex)、解析器生成器(Yecc),和一组用于操作(语言)抽象形式的通用函数(Syntax Tools)。

2.4.1. Leex

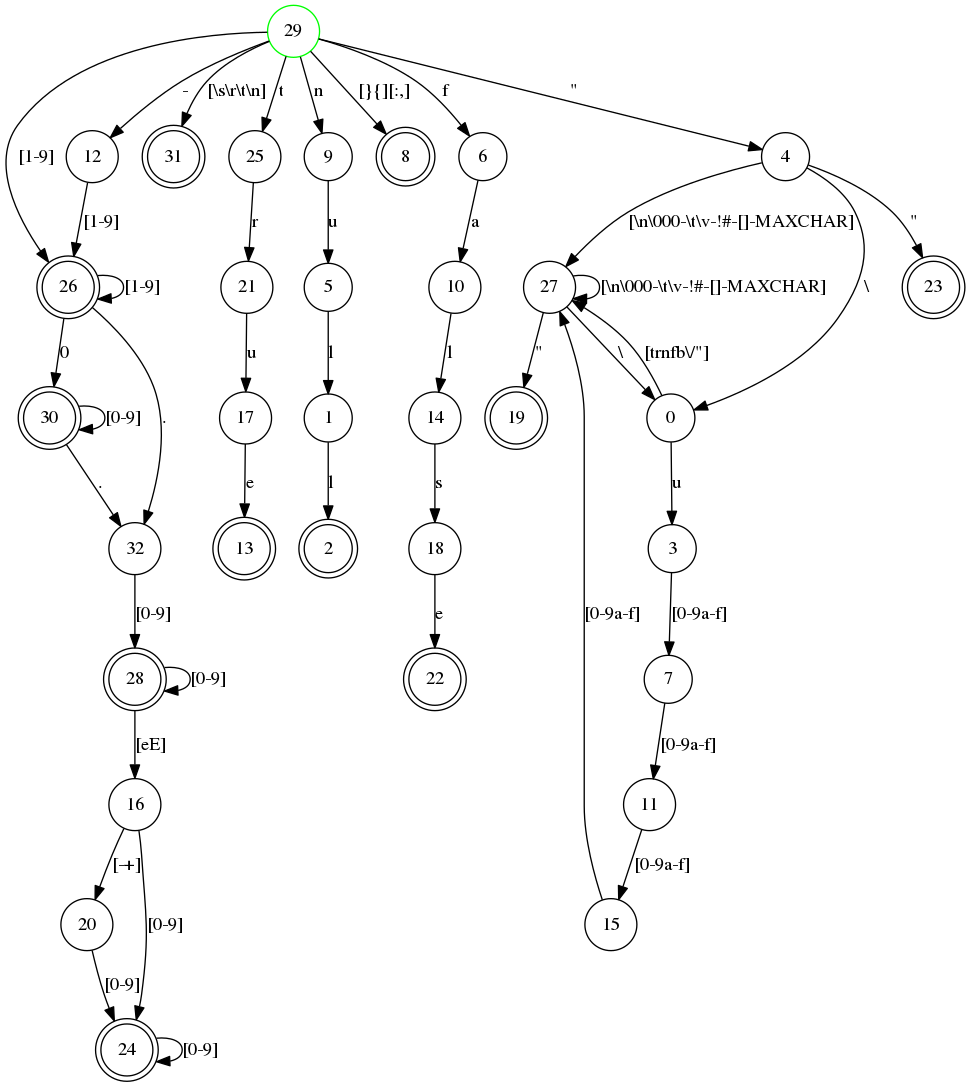

Leex是Erlang 词法分析器生成器。词法分析器生成器从定义文件 xrl 获取 DFA (译注,DFA 是 Deterministic Finite Automaton 的简称,形式语言术语,译为 确定有限自动机)的描述,并生成一个与 DFA 描述的符号相匹配 Erlang 程序。

关于如何为分词器编写 DFA 定义的细节已经超出了本书的范围。要得到详细的解释,我推荐 “龙书”。(是指 Aho, Sethi 和 Ullman合著的 《Compiler》)。其他好的资源包括激发了 leex 灵感的 “flex” 程序的手册,以及 leex 文档本身。如果你已经安装了 flex,你可以通过输入以下命令来阅读完整的手册:

> info flex

在线 Erlang 文档也有 leex 手册 (参见 yecc.html)。

我们可以使用词法分析器生成器创建一个识别 JSON 符号的 Erlang 程序。通过查看JSON定义 link:http://www.ecma-international.org/publications/files/ECMA-ST/ECMA-404.pdf 我们可以看到,我们只需要处理少量的令牌。

Definitions.

Digit = [0-9]

Digit1to9 = [1-9]

HexDigit = [0-9a-f]

UnescapedChar = [^\"\\]

EscapedChar = (\\\\)|(\\\")|(\\b)|(\\f)|(\\n)|(\\r)|(\\t)|(\\/)

Unicode = (\\u{HexDigit}{HexDigit}{HexDigit}{HexDigit})

Quote = [\"]

Delim = [\[\]:,{}]

Space = [\n\s\t\r]

Rules.

{Quote}{Quote} : {token, {string, TokenLine, ""}}.

{Quote}({EscapedChar}|({UnescapedChar})|({Unicode}))+{Quote} :

{token, {string, TokenLine, drop_quotes(TokenChars)}}.

null : {token, {null, TokenLine}}.

true : {token, {true, TokenLine}}.

false : {token, {false, TokenLine}}.

{Delim} : {token, {list_to_atom(TokenChars), TokenLine}}.

{Space} : skip_token.

-?{Digit1to9}+{Digit}*\.{Digit}+((E|e)(\+|\-)?{Digit}+)? :

{token, {number, TokenLine, list_to_float(TokenChars)}}.

-?{Digit1to9}+{Digit}* :

{token, {number, TokenLine, list_to_integer(TokenChars)+0.0}}.

Erlang code.

-export([t/0]).

drop_quotes([$" | QuotedString]) -> literal(lists:droplast(QuotedString)).

literal([$\\,$" | Rest]) ->

[$"|literal(Rest)];

literal([$\\,$\\ | Rest]) ->

[$\\|literal(Rest)];

literal([$\\,$/ | Rest]) ->

[$/|literal(Rest)];

literal([$\\,$b | Rest]) ->

[$\b|literal(Rest)];

literal([$\\,$f | Rest]) ->

[$\f|literal(Rest)];

literal([$\\,$n | Rest]) ->

[$\n|literal(Rest)];

literal([$\\,$r | Rest]) ->

[$\r|literal(Rest)];

literal([$\\,$t | Rest]) ->

[$\t|literal(Rest)];

literal([$\\,$u,D0,D1,D2,D3|Rest]) ->

Char = list_to_integer([D0,D1,D2,D3],16),

[Char|literal(Rest)];

literal([C|Rest]) ->

[C|literal(Rest)];

literal([]) ->[].

t() ->

{ok,

[{'{',1},

{string,2,"no"},

{':',2},

{number,2,1.0},

{'}',3}

],

4}.通过使用 Leex 编译器,我们可以将这个 DFA 编译为 Erlang 代码,并且通过提供 dfa_graph 选项,我们还可以生成一个 dot-file,可以用 Graphviz 查看。

1> leex:file(json_tokens, [dfa_graph]).

{ok, "./json_tokens.erl"}

2>你可以通过 dotty 来查看 DFA 图。

> dotty json_tokens.dot

我们可以在示例 json 文件 (test.json) 上尝试分词器。

{

"no" : 1,

"name" : "Jack \"Bee\" Nimble",

"escapes" : "\b\n\r\t\f\//\\",

"format": {

"type" : "rect",

"widths" : [1920,1600],

"height" : -1080,

"interlace" : false,

"unicode" : "\u002f",

"frame rate": 4.5

}

}

首先,我们需要编译分词器,然后读取文件并将其转换为字符串。最后,我们可以使用 leex 生成的 string/1 函数来将测试文件分词。

2> c(json_tokens).

{ok,json_tokens}.

3> f(File), f(L), {ok, File} = file:read_file("test.json"), L = binary_to_list(File), ok.

ok

4> f(Tokens), {ok, Tokens,_} = json_tokens:string(L), hd(Tokens).

{'{',1}

5>shell 函数 f/1 告诉终端忘记变量绑定。如果您想尝试多次绑定变量的命令,例如在编写 lexer 并希望在每次重写后尝试它的场景下,这是很有用的。有关 shell 命令的细节将在后面的章节中介绍。

有了 JSON 的分词器,我们现在可以使用解析器生成器 Yecc 来编写一个 JSON 解析器了。

2.4.2. Yecc

Yecc 是 Erlang 的解析器生成器。该名称来自Yacc (Yet another compiler compiler),它是 C 的经典的解析器生成器。

现在我们有了一个用于 JSON 项式的词法分析器,我们就可以使用 yecc 编写一个解析器。

Nonterminals value values object array pair pairs.

Terminals number string true false null '[' ']' '{' '}' ',' ':'.

Rootsymbol value.

value -> object : '$1'.

value -> array : '$1'.

value -> number : get_val('$1').

value -> string : get_val('$1').

value -> 'true' : get_val('$1').

value -> 'null' : get_val('$1').

value -> 'false' : get_val('$1').

object -> '{' '}' : #{}.

object -> '{' pairs '}' : '$2'.

pairs -> pair : '$1'.

pairs -> pair ',' pairs : maps:merge('$1', '$3').

pair -> string ':' value : #{ get_val('$1') => '$3' }.

array -> '[' ']' : {}.

array -> '[' values ']' : list_to_tuple('$2').

values -> value : [ '$1' ].

values -> value ',' values : [ '$1' | '$3' ].

Erlang code.

get_val({_,_,Val}) -> Val;

get_val({Val, _}) -> Val.然后,我们可以使用 yecc 生成一个实现解析器的 Erlang 程序,并调用 parse/1 函数,该函数使用由分词器生成的记号作为参数。

5> yecc:file(yecc_json_parser), c(yecc_json_parser).

{ok,yexx_json_parser}

6> f(Json), {ok, Json} = yecc_json_parser:parse(Tokens).

{ok,#{"escapes" => "\b\n\r\t\f////",

"format" => #{"frame rate" => 4.5,

"height" => -1080.0,

"interlace" => false,

"type" => "rect",

"unicode" => "/",

"widths" => {1920.0,1.6e3}},

"name" => "Jack \"Bee\" Nimble",

"no" => 1.0}}当您希望将自己的完整语言编译到 Erlang 虚拟机时,Leex 和 Yecc 工具非常适合。通过将它们与语法工具 (特别是 Merl ) 结合使用,您可以操作 Erlang 抽象语法树,以生成 Erlang 代码或更改 Erlang 代码的行为。

2.5. 语法工具和 Merl

语法工具是一组库,用于操作 Erlang 抽象语法树 (AST) 的内部表示。

语法工具应用程序还包括自 Erlang 18.0 以来的工具 Merl。你可以使用 Merl 来非常容易地操作语法树,并用 Erlang 代码编写解析转换。

您可以在 Erlang.org 站点上找到语法工具的文档 http://erlang.org/doc/apps/syntax_tools/chapter.html。

2.6. 编译 Elixir

在 Beam 上编写自己的编程语言的另一种方法,是使用 Elixir 中的元编程工具。Elixir 通过 Erlang 抽象语法树编译 Beam 代码。

使用 Elixir 的 defmacro,您可以直接在 Elixir 中定义您自己的领域特定语言(DSL)。

3. 进程

轻量级进程的概念是 Erlang 和 BEAM 的本质;它使 BEAM 从其他虚拟机中脱颖而出。为了理解 BEAM (以及 Erlang 和 Elixir )是如何工作的,您需要了解进程是如何工作的细节,这将帮助您理解 BEAM 的核心概念,包括对进程来说什么是容易且低成本的,什么是困难且昂贵的。

BEAM 中的几乎所有内容都与进程的概念有关,在本章中,我们将进一步了解这些关系。我们将对 Chapter 1 部分的内容进行扩展,并更深入地了解一些概念,如内存管理、消息传递,特别是调度。

Erlang 进程与操作系统进程非常相似。它有自己的地址空间,它可以通过信号和消息与其他进程通信,并且执行是由抢占式调度程序控制的。

当你的 Erlang 或 Elixir 系统中出现性能问题时,这个问题通常是由特定进程中的问题或进程之间的不平衡引起的。当然还有其他常见的问题,如糟糕的算法或内存问题,这些内容将在其他章节中涉及到。能够查明导致问题的进程始终是重要的,因此我们将研究 Erlang 运行时系统中用于进程检查的可用工具。

我们将在本章中介绍这些工具,通过它们了解进程和调度器是如何工作的,然后我们将把所有工具放在一起作为最后的练习。

3.1. 什么是进程?

进程是相互隔离的实体,代码的执行就发生在其中。进程通过隔离错误对执行有缺陷代码的进程的影响,来保护系统不受代码中的错误影响。

运行时提供了许多检查进程的工具,帮助我们发现瓶颈、问题和资源的过度使用。这些工具将帮助您识别和检查有问题的进程。

3.1.1. 从终端获得进程列表

让我们来看看在运行的系统中有哪些进程。最简单的方法是启动一个 Erlang 终端并发出 shell 命令 ` i() ` 。在 Elixir 中,您可以像 :shell_default.i 这样来调用 ` shell_default ` 模块中的 i/0 函数。

$ erl

Erlang/OTP 19 [erts-8.1] [source] [64-bit] [smp:4:4] [async-threads:10]

[hipe] [kernel-poll:false]

Eshell V8.1 (abort with ^G)

1> i().

Pid Initial Call Heap Reds Msgs

Registered Current Function Stack

<0.0.0> otp_ring0:start/2 376 579 0

init init:loop/1 2

<0.1.0> erts_code_purger:start/0 233 4 0

erts_code_purger erts_code_purger:loop/0 3

<0.4.0> erlang:apply/2 987 100084 0

erl_prim_loader erl_prim_loader:loop/3 5

<0.30.0> gen_event:init_it/6 610 226 0

error_logger gen_event:fetch_msg/5 8

<0.31.0> erlang:apply/2 1598 416 0

application_controlle gen_server:loop/6 7

<0.33.0> application_master:init/4 233 64 0

application_master:main_loop/2 6

<0.34.0> application_master:start_it/4 233 59 0

application_master:loop_it/4 5

<0.35.0> supervisor:kernel/1 610 1767 0

kernel_sup gen_server:loop/6 9

<0.36.0> erlang:apply/2 6772 73914 0

code_server code_server:loop/1 3

<0.38.0> rpc:init/1 233 21 0

rex gen_server:loop/6 9

<0.39.0> global:init/1 233 44 0

global_name_server gen_server:loop/6 9

<0.40.0> erlang:apply/2 233 21 0

global:loop_the_locker/1 5

<0.41.0> erlang:apply/2 233 3 0

global:loop_the_registrar/0 2

<0.42.0> inet_db:init/1 233 209 0

inet_db gen_server:loop/6 9

<0.44.0> global_group:init/1 233 55 0

global_group gen_server:loop/6 9

<0.45.0> file_server:init/1 233 79 0

file_server_2 gen_server:loop/6 9

<0.46.0> supervisor_bridge:standard_error/ 233 34 0

standard_error_sup gen_server:loop/6 9

<0.47.0> erlang:apply/2 233 10 0

standard_error standard_error:server_loop/1 2

<0.48.0> supervisor_bridge:user_sup/1 233 54 0

gen_server:loop/6 9

<0.49.0> user_drv:server/2 987 1975 0

user_drv user_drv:server_loop/6 9

<0.50.0> group:server/3 233 40 0

user group:server_loop/3 4

<0.51.0> group:server/3 987 12508 0

group:server_loop/3 4

<0.52.0> erlang:apply/2 4185 9537 0

shell:shell_rep/4 17

<0.53.0> kernel_config:init/1 233 255 0

gen_server:loop/6 9

<0.54.0> supervisor:kernel/1 233 56 0

kernel_safe_sup gen_server:loop/6 9

<0.58.0> erlang:apply/2 2586 18849 0

c:pinfo/1 50

Total 23426 220863 0

222

oki/0 函数输出系统中所有进程的列表。其中每个进程的信息输出2行。整个输出的前两行是标题区域,说明输出信息的含义。可以看到,您获得了进程 ID (Pid) 和进程名称(如果有的话),以及关于进程的入口函数和正在执行的函数代码的信息。您还可以获得关于堆和栈的大小,以及进程的规约值(reductions,译注,一个调度相关的计数,将在后边详述)和消息的数量信息。在本章的其余部分,我们将详细了解什么是栈、堆、规约值和消息。现在我们可以假设,如果堆大小的值很大,那么说明进程使用了很多内存,而如果规约值很大,说明进程就执行了很多代码。

我们可以用 i/3 函数进一步检查进程。让我们看一下 code_server 进程。我们可以在前面的列表中看到, code_server 的进程标识符 ( pid ) 是 <0.36.0>。通过 pid 的三个数字调用 i/3 ,我们得到以下信息:

2> i(0,36,0).

[{registered_name,code_server},

{current_function,{code_server,loop,1}},

{initial_call,{erlang,apply,2}},

{status,waiting},

{message_queue_len,0},

{messages,[]},

{links,[<0.35.0>]},

{dictionary,[]},

{trap_exit,true},

{error_handler,error_handler},

{priority,normal},

{group_leader,<0.33.0>},

{total_heap_size,46422},

{heap_size,46422},

{stack_size,3},

{reductions,93418},

{garbage_collection,[{max_heap_size,#{error_logger => true,

kill => true,

size => 0}},

{min_bin_vheap_size,46422},

{min_heap_size,233},

{fullsweep_after,65535},

{minor_gcs,0}]},

{suspending,[]}]

3>我们从这个调用中得到了很多信息,在本章的其余部分,我们将详细了解这些信息的含义。

第一行告诉我们,进程被命名为`code_server`。接下来,在 current_function 中我们可以看到进程当前正在执行或挂起的函数,在 initial_call 中,可以看到进程开始执行的入口函数名称。

我们还可以看到,当前进程被挂起等待消息( {status,waiting} ),并且在没有消息在邮箱中 ({message_queue_len,0}, {messages,[]})。在本章的后面,我们将进一步了解消息传递的工作原理。

字段 priority, suspending, reductions, links, trap_exit, error_handler,和 group_leader 控制进程执行、错误处理和 IO。在介绍 Observer 时,我们将对此进行更深入的研究。

最后几个字段 (dictionary, total_heap_size, heap_size, stack_size,和 garbage_collection) 提供了进程内存使用情况的信息。我们将在 Chapter 12 章节中详细讨论进程内存区域。

另一种获取进程信息的更直接的方法是使用 BREAK 菜单: ctrl+c p [enter] 提供的进程信息。注意,当处于 BREAK 状态时,整个节点都会冻结。

3.1.2. 程序化的进程探查

shell 函数只打印有关进程的信息,但实际上这些信息可以作为数据形式获取到,因此您可以编写自己的工具来检查进程。您可以通过`erlang:processes/0` 获得所有进程的列表,并通过 erlang:process_info/1 获得某个进程的更多信息。我们也可以使用函数 whereis/1 来用进程名获得它的pid:

1> Ps = erlang:processes().

[<0.0.0>,<0.1.0>,<0.4.0>,<0.30.0>,<0.31.0>,<0.33.0>,

<0.34.0>,<0.35.0>,<0.36.0>,<0.38.0>,<0.39.0>,<0.40.0>,

<0.41.0>,<0.42.0>,<0.44.0>,<0.45.0>,<0.46.0>,<0.47.0>,

<0.48.0>,<0.49.0>,<0.50.0>,<0.51.0>,<0.52.0>,<0.53.0>,

<0.54.0>,<0.60.0>]

2> CodeServerPid = whereis(code_server).

<0.36.0>

3> erlang:process_info(CodeServerPid).

[{registered_name,code_server},

{current_function,{code_server,loop,1}},

{initial_call,{erlang,apply,2}},

{status,waiting},

{message_queue_len,0},

{messages,[]},

{links,[<0.35.0>]},

{dictionary,[]},

{trap_exit,true},

{error_handler,error_handler},

{priority,normal},

{group_leader,<0.33.0>},

{total_heap_size,24503},

{heap_size,6772},

{stack_size,3},

{reductions,74260},

{garbage_collection,[{max_heap_size,#{error_logger => true,

kill => true,

size => 0}},

{min_bin_vheap_size,46422},

{min_heap_size,233},

{fullsweep_after,65535},

{minor_gcs,33}]},

{suspending,[]}]以数据方式获取进程信息后,我们可以按自己的意愿编写代码来分析或排序数据。如果我们 (使用 erlang:processes/0) 抓取系统中的所有进程,然后 (使用 erlang:process_info(P,total_heap_size)) 获取每个进程的堆大小信息,我们就可以构造一个包含 pid 和堆大小的列表,并根据堆大小对其排序:

1> lists:reverse(lists:keysort(2,[{P,element(2,

erlang:process_info(P,total_heap_size))}

|| P <- erlang:processes()])).

[{<0.36.0>,24503},

{<0.52.0>,21916},

{<0.4.0>,12556},

{<0.58.0>,4184},

{<0.51.0>,4184},

{<0.31.0>,3196},

{<0.49.0>,2586},

{<0.35.0>,1597},

{<0.30.0>,986},

{<0.0.0>,752},

{<0.33.0>,609},

{<0.54.0>,233},

{<0.53.0>,233},

{<0.50.0>,233},

{<0.48.0>,233},

{<0.47.0>,233},

{<0.46.0>,233},

{<0.45.0>,233},

{<0.44.0>,233},

{<0.42.0>,233},

{<0.41.0>,233},

{<0.40.0>,233},

{<0.39.0>,233},

{<0.38.0>,233},

{<0.34.0>,233},

{<0.1.0>,233}]

2>您可能会注意到,许多进程的堆大小为233,这是因为它是进程默认的起始堆大小。

请参阅模块 erlang 的文档 process_info,以获得信息的完整描述。

请注意, process_info/1 函数只返回进程可用的所有信息的子集,以及`process_info/2` 函数用于获取额外信息。例如,要提取上面 code_server 进程的 backtrace ,我们可以运行:

3> process_info(whereis(code_server), backtrace).

{backtrace,<<"Program counter: 0x00000000161de900 (code_server:loop/1 + 152)\nCP: 0x0000000000000000 (invalid)\narity = 0\n\n0"...>>}看到上面信息末端的三个点了吗?这意味着输出被截断了。查看整个值的一个有用的技巧是使用 rp/1 函数包装上面的函数调用:

4> rp(process_info(whereis(code_server), backtrace)).另一种方法是使用 io:put_chars/1 函数,如下所示:

5> {backtrace, Backtrace} = process_info(whereis(code_server), backtrace).

{backtrace,<<"Program counter: 0x00000000161de900 (code_server:loop/1 + 152)\nCP: 0x0000000000000000 (invalid)\narity = 0\n\n0"...>>}

6> io:put_chars(Backtrace).由于其冗长,这里没有包含命令 4> 和 6> 的输出,请在 Erlang shell 中尝试以上命令。

3.1.3. 使用 Observer 检查进程

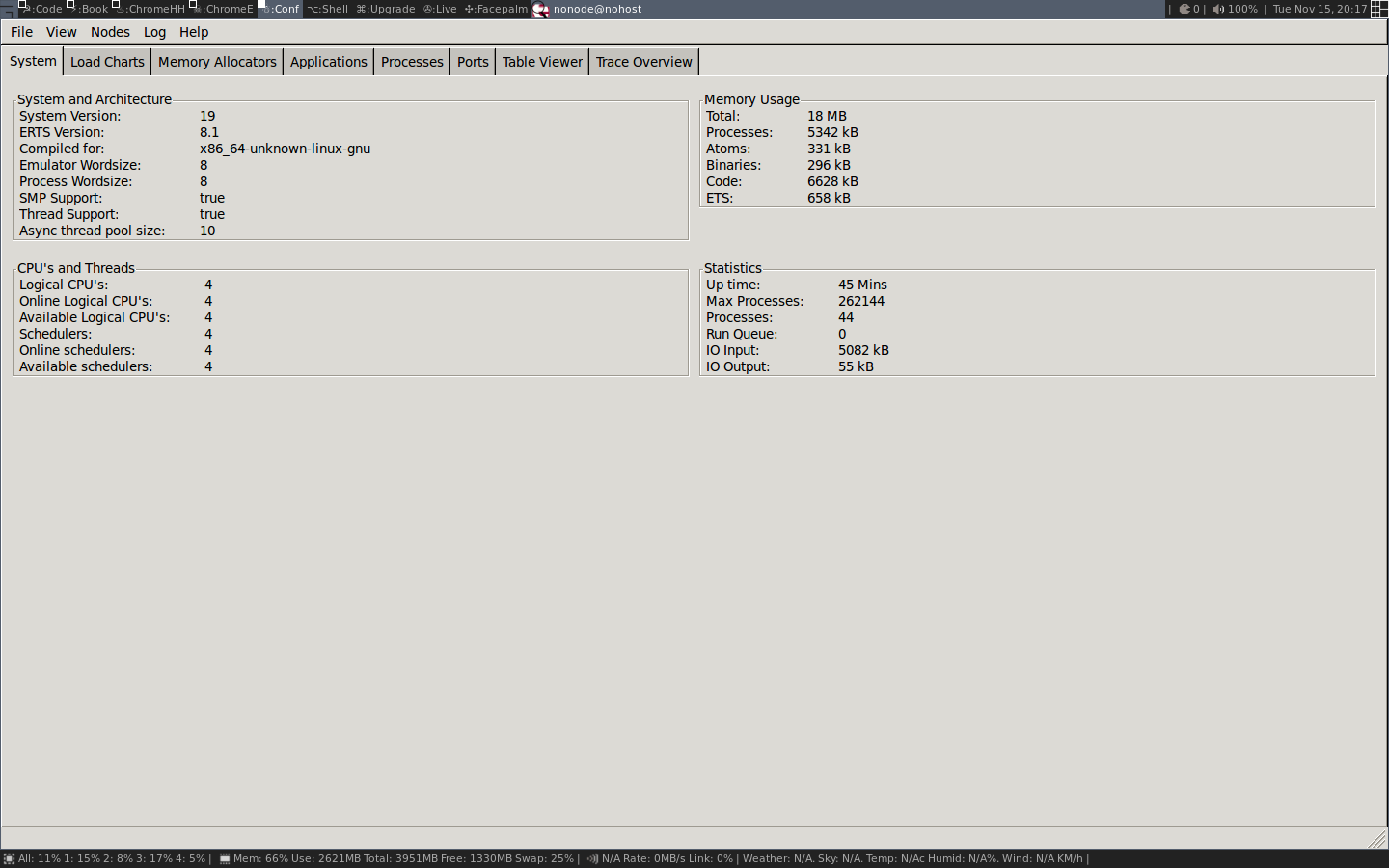

第三种检查进程的方法是使用 Observer。Observer 是一个用于检查 Erlang 运行时系统的扩展图形界面。在本书中,我们将使用观察者来检查系统的不同方面。

观察者可以从操作系统终端启动并连接到 Erlang 节点,也可以直接从 Elixir 或 Erlang shell 启动。现在我们在 Elixir shell 中使用 :observer.start 来启动观察者。或者在 Erlang shell 中使用:

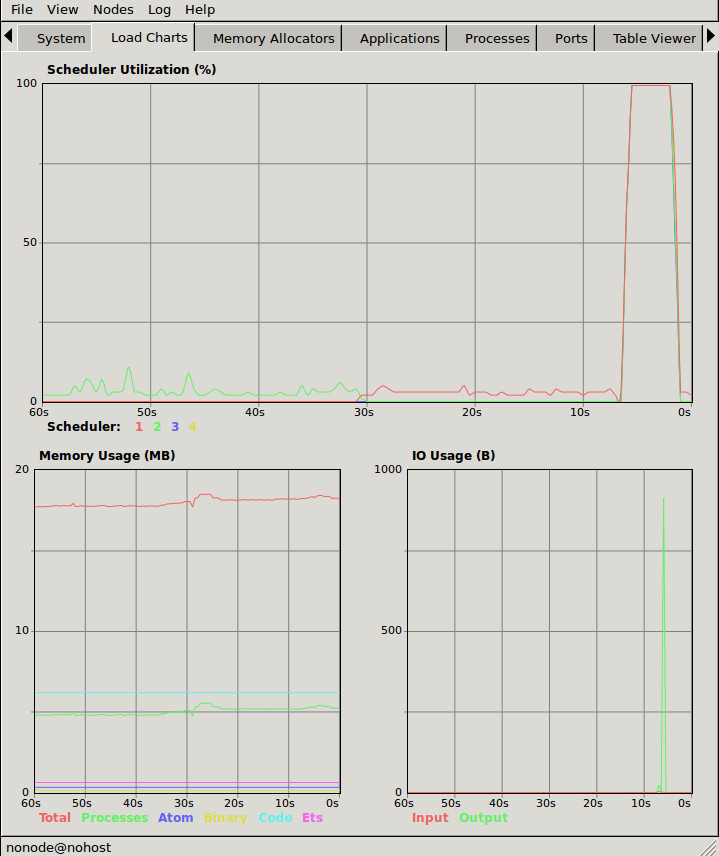

7> observer:start().当 Observer 启动时,它会显示一个系统概览,如下截图:

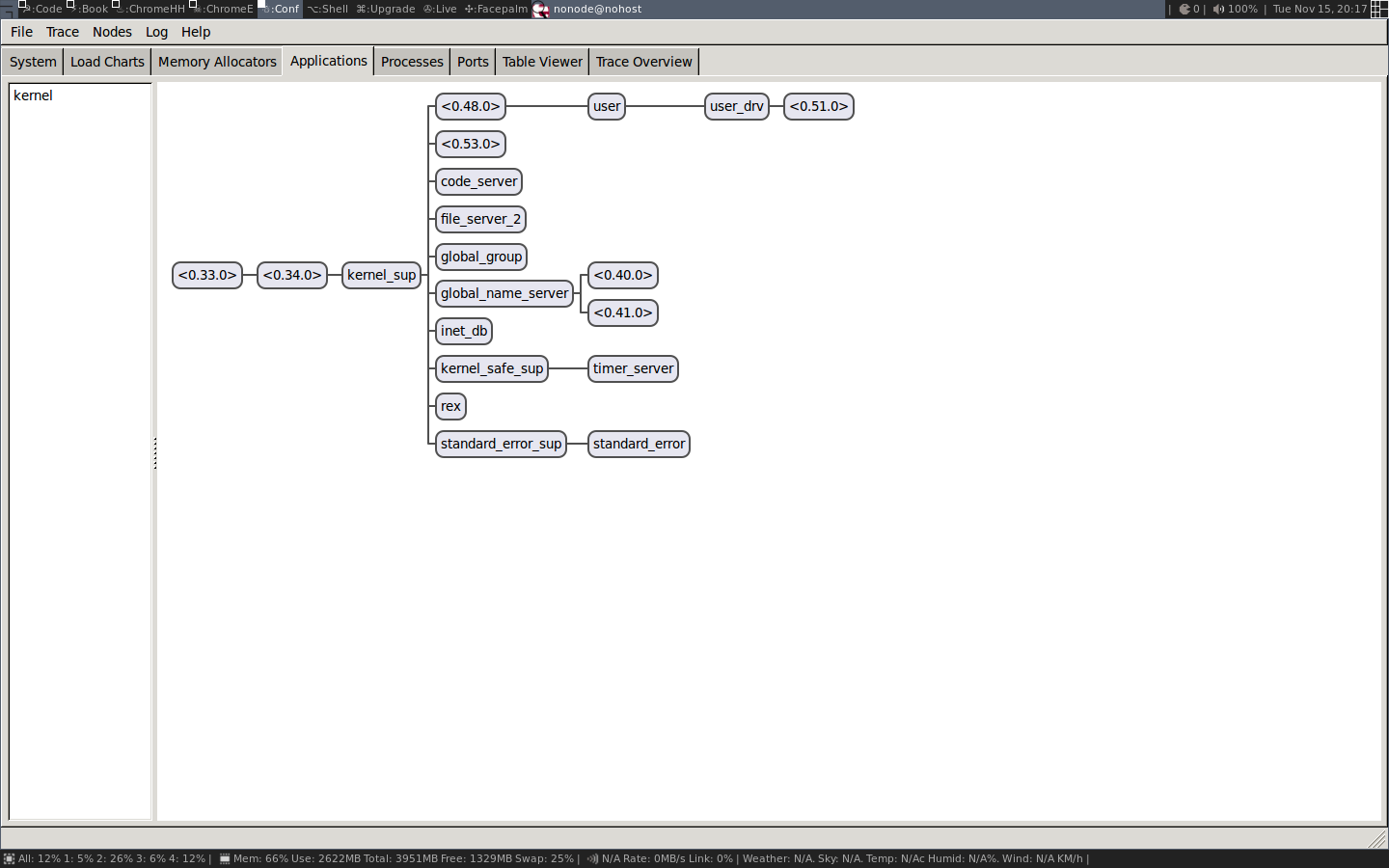

我们将在本章和下一章中详细讨论这些信息。现在我们只用Observer来观察正在运行中的进程。首先我们看一下 Applications 标签,它显示了运行系统的监督树:

在这里,我们得到了流程如何链接的图形视图。这是一种用来了解系统被如何构建的好方法。您还会很好的感觉到,进程就像漂浮在空间中的孤立实体通过链接相互连接。

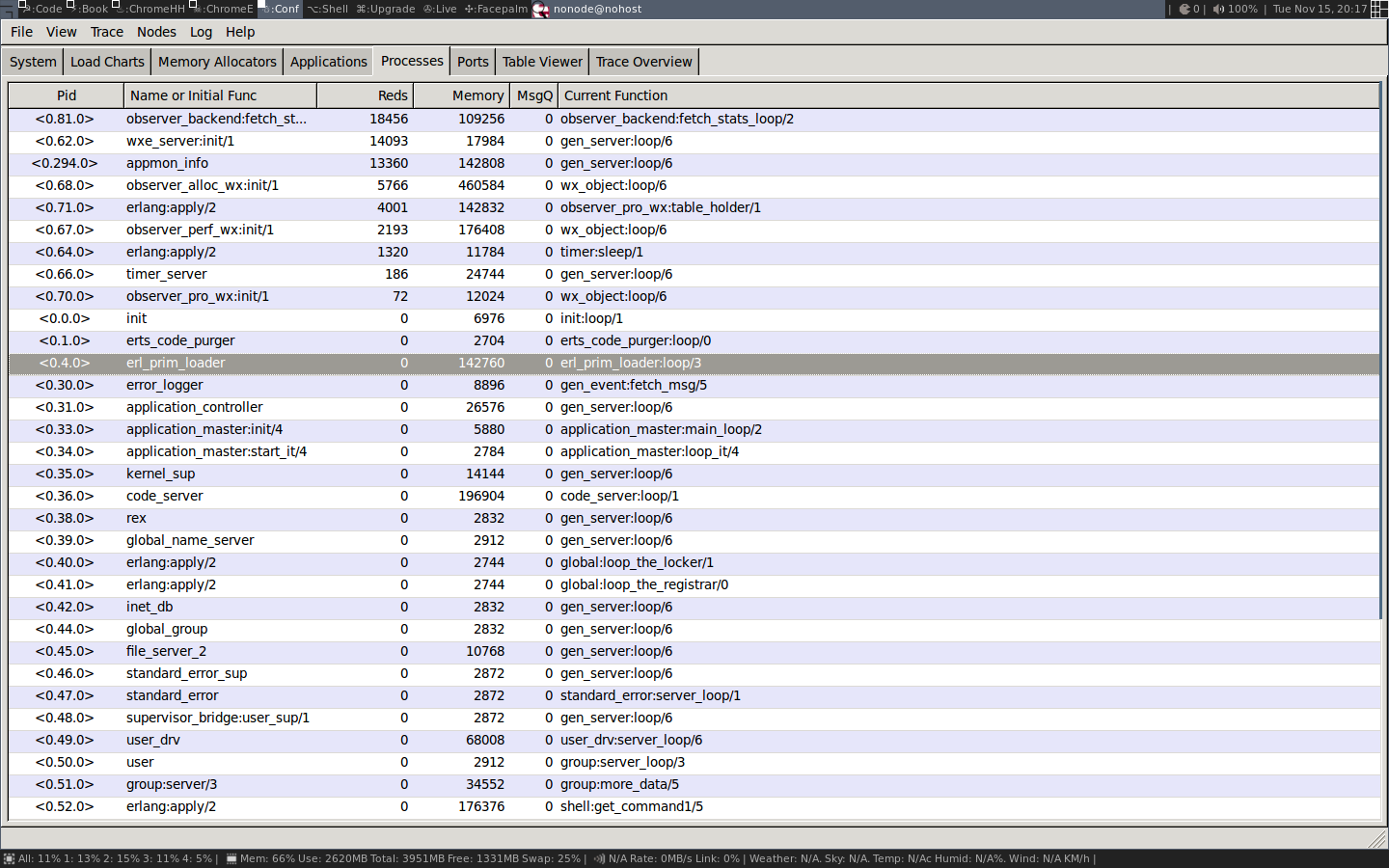

为了得到一些关于进程的有用信息,我们切换到 Processes 选项卡:

在这个视图中,我们得到了与 shell 中的 i/0 基本相同的信息。我们可以看到 pid、注册名称、规约值数量、内存使用量、消息数量和当前函数。

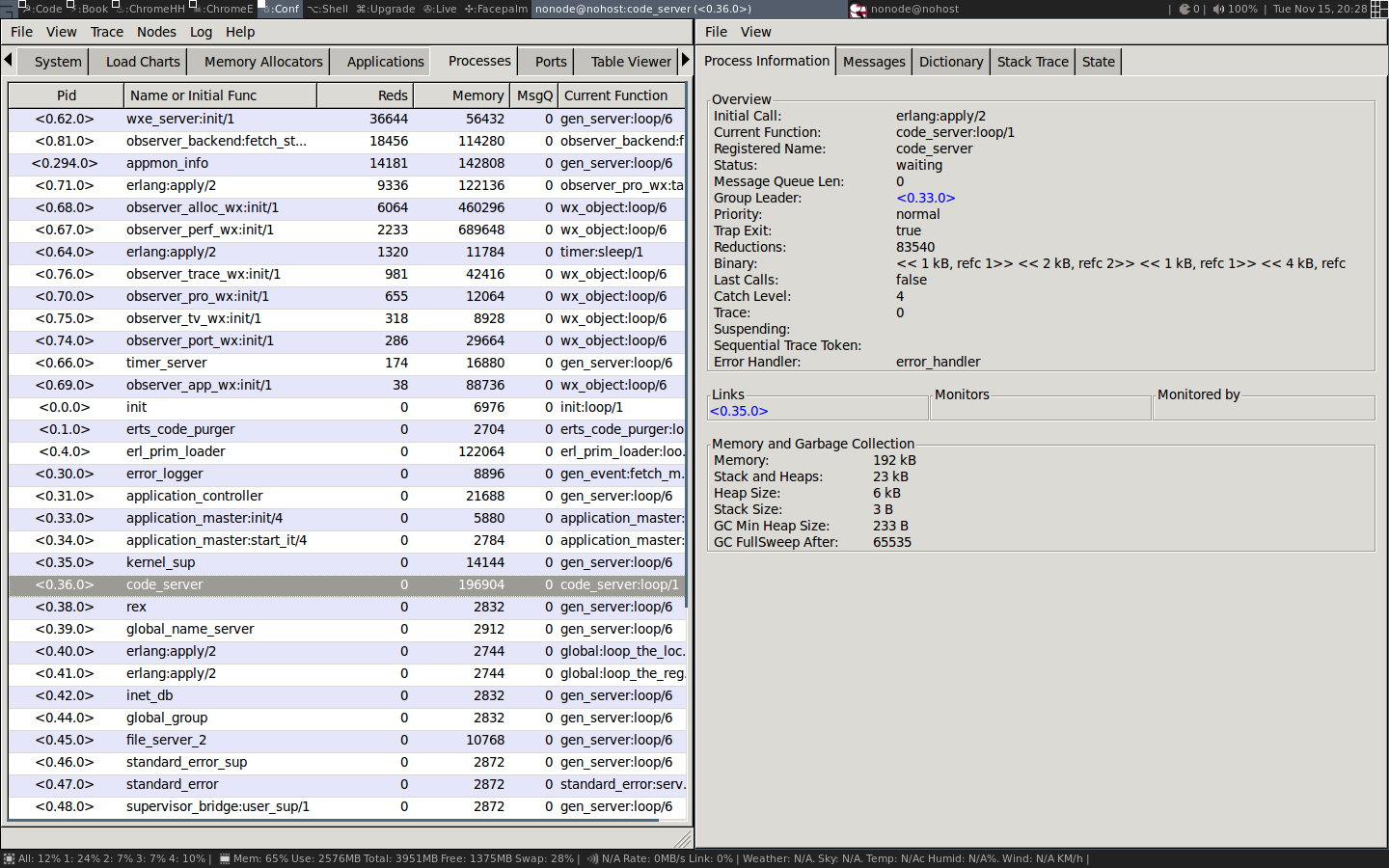

我们也可以通过双击某行的行来查看进程(例如 code server),以获得通过 process_info/2 可以获得的信息:

我们现在不讨论所有这些信息的意义,但如果你继续阅读,所有的信息最终都会被揭示。

既然我们已经基本了解了什么是进程,以及一些用于查找和检查系统中进程的工具,那么我们就可以深入了解进程是如何实现的了。

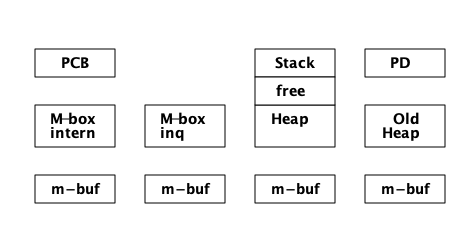

3.2. 进程就是内存

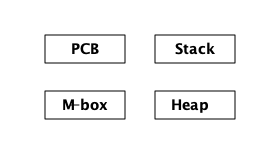

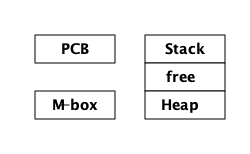

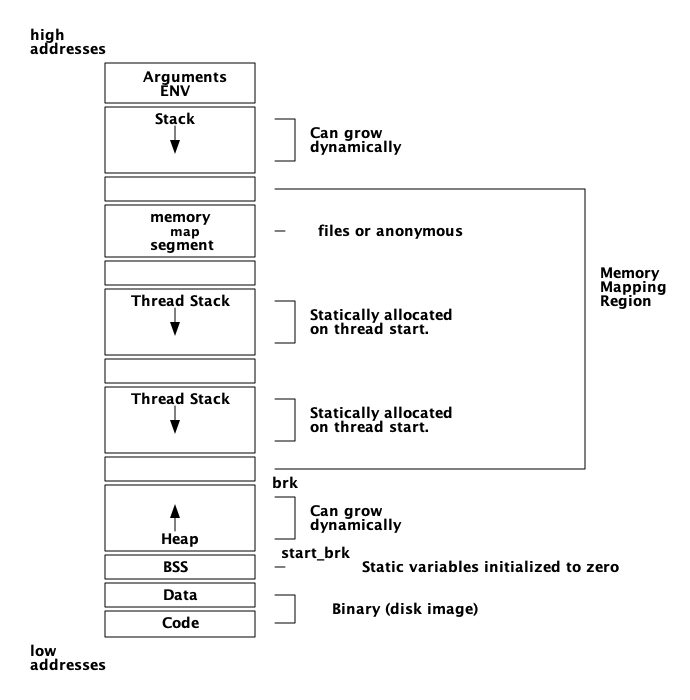

一个进程基本上是四个内存块:一个_stack_,一个_heap_,一个_message区域,和一个进程控制块_ (PCB)。

栈用于通过存储返回地址来跟踪程序执行情况、向函数传递参数,以及保存本地变量。更大的结构,如列表和元组被存储在堆中。

Message area,也称为信箱 ( mailbox ) ,用于存储从其他进程发送给自身进程的消息。进程控制块用于跟踪进程的状态。

如图,以内存视角查看进程:

这幅关于进程的图已经非常简化,我们将对更精细的版本进行多次迭代,以得到更精确的图。

栈、堆和邮箱内存都是动态分配的,可以根据需要扩容或缩容。我们将在后面的章节中看到它是如何工作的。另一方面,PCB 是静态分配的,并且包含许多控制进程的字段。

实际上,我们可以通过使用 HiPE’s Built In Functions (HiPE BIFs) 中的自省来检查其中一些内存区域。有了这些 BIFs,我们可以打印出栈、堆和 PCB 的内存中的内容。原始数据会被打印出来,在大多数情况下,人类可读的版本会与数据一起打印出来。要真正了解检查内存时我们所看到的一切,我们需要知道更多关于 Erlang 标签方案 (将在 Chapter 4 中介绍)、执行模型和错误处理(将在 Chapter 5 中介绍),但是使用这些工具将给我们一个很好的视图来说明,进程其实就是内存。

使用 hipe_bifs:show_estack/1 我们可以看到进程栈的上下文:

1> hipe_bifs:show_estack(self()).

| BEAM STACK |

| Address | Contents |

|--------------------|--------------------| BEAM ACTIVATION RECORD

| 0x00007f9cc3238310 | 0x00007f9cc2ea6fe8 | BEAM PC shell:exprs/7 + 0x4e

| 0x00007f9cc3238318 | 0xfffffffffffffffb | []

| 0x00007f9cc3238320 | 0x000000000000644b | none

|--------------------|--------------------| BEAM ACTIVATION RECORD

| 0x00007f9cc3238328 | 0x00007f9cc2ea6708 | BEAM PC shell:eval_exprs/7 + 0xf

| 0x00007f9cc3238330 | 0xfffffffffffffffb | []

| 0x00007f9cc3238338 | 0xfffffffffffffffb | []

| 0x00007f9cc3238340 | 0x000000000004f3cb | cmd

| 0x00007f9cc3238348 | 0xfffffffffffffffb | []

| 0x00007f9cc3238350 | 0x00007f9cc3237102 | {value,#Fun<shell.5.104321512>}

| 0x00007f9cc3238358 | 0x00007f9cc323711a | {eval,#Fun<shell.21.104321512>}

| 0x00007f9cc3238360 | 0x00000000000200ff | 8207

| 0x00007f9cc3238368 | 0xfffffffffffffffb | []

| 0x00007f9cc3238370 | 0xfffffffffffffffb | []

| 0x00007f9cc3238378 | 0xfffffffffffffffb | []

|--------------------|--------------------| BEAM ACTIVATION RECORD

| 0x00007f9cc3238380 | 0x00007f9cc2ea6300 | BEAM PC shell:eval_loop/3 + 0x47

| 0x00007f9cc3238388 | 0xfffffffffffffffb | []

| 0x00007f9cc3238390 | 0xfffffffffffffffb | []

| 0x00007f9cc3238398 | 0xfffffffffffffffb | []

| 0x00007f9cc32383a0 | 0xfffffffffffffffb | []

| 0x00007f9cc32383a8 | 0x000001a000000343 | <0.52.0>

|....................|....................| BEAM CATCH FRAME

| 0x00007f9cc32383b0 | 0x0000000000005a9b | CATCH 0x00007f9cc2ea67d8

| | | (BEAM shell:eval_exprs/7 + 0x29)

|********************|********************|

|--------------------|--------------------| BEAM ACTIVATION RECORD

| 0x00007f9cc32383b8 | 0x000000000093aeb8 | BEAM PC normal-process-exit

| 0x00007f9cc32383c0 | 0x00000000000200ff | 8207

| 0x00007f9cc32383c8 | 0x000001a000000343 | <0.52.0>

|--------------------|--------------------|

true

2>我们将 Chapter 4 中进一步研究的栈和堆中的值。堆的内容由 hipe_bifs:show_heap/1 打印。我们不想在这里列出一个大的堆,所以我们将生成一个不做任何事情的新进程并显示它的堆:

2> hipe_bifs:show_heap(spawn(fun () -> ok end)).

From: 0x00007f7f33ec9588 to 0x00007f7f33ec9848

| H E A P |

| Address | Contents |

|--------------------|--------------------|

| 0x00007f7f33ec9588 | 0x00007f7f33ec959a | #Fun<erl_eval.20.52032458>

| 0x00007f7f33ec9590 | 0x00007f7f33ec9839 | [[]]

| 0x00007f7f33ec9598 | 0x0000000000000154 | Thing Arity(5) Tag(20)

| 0x00007f7f33ec95a0 | 0x00007f7f3d3833d0 | THING

| 0x00007f7f33ec95a8 | 0x0000000000000000 | THING

| 0x00007f7f33ec95b0 | 0x0000000000600324 | THING

| 0x00007f7f33ec95b8 | 0x0000000000000000 | THING

| 0x00007f7f33ec95c0 | 0x0000000000000001 | THING

| 0x00007f7f33ec95c8 | 0x000001d0000003a3 | <0.58.0>

| 0x00007f7f33ec95d0 | 0x00007f7f33ec95da | {[],{eval...

| 0x00007f7f33ec95d8 | 0x0000000000000100 | Arity(4)

| 0x00007f7f33ec95e0 | 0xfffffffffffffffb | []

| 0x00007f7f33ec95e8 | 0x00007f7f33ec9602 | {eval,#Fun<shell.21.104321512>}

| 0x00007f7f33ec95f0 | 0x00007f7f33ec961a | {value,#Fun<shell.5.104321512>}...

| 0x00007f7f33ec95f8 | 0x00007f7f33ec9631 | [{clause...

...

| 0x00007f7f33ec97d0 | 0x00007f7f33ec97fa | #Fun<shell.5.104321512>

| 0x00007f7f33ec97d8 | 0x00000000000000c0 | Arity(3)

| 0x00007f7f33ec97e0 | 0x0000000000000e4b | atom

| 0x00007f7f33ec97e8 | 0x000000000000001f | 1

| 0x00007f7f33ec97f0 | 0x0000000000006d0b | ok

| 0x00007f7f33ec97f8 | 0x0000000000000154 | Thing Arity(5) Tag(20)

| 0x00007f7f33ec9800 | 0x00007f7f33bde0c8 | THING

| 0x00007f7f33ec9808 | 0x00007f7f33ec9780 | THING

| 0x00007f7f33ec9810 | 0x000000000060030c | THING

| 0x00007f7f33ec9818 | 0x0000000000000002 | THING

| 0x00007f7f33ec9820 | 0x0000000000000001 | THING

| 0x00007f7f33ec9828 | 0x000001d0000003a3 | <0.58.0>

| 0x00007f7f33ec9830 | 0x000001a000000343 | <0.52.0>

| 0x00007f7f33ec9838 | 0xfffffffffffffffb | []

| 0x00007f7f33ec9840 | 0xfffffffffffffffb | []

|--------------------|--------------------|

true

3>我们也可以通过 hipe_bifs:show_pcb/1 来打印 PCB 中的字段:

3> hipe_bifs:show_pcb(self()).

P: 0x00007f7f3cbc0400

---------------------------------------------------------------

Offset| Name | Value | *Value |

0 | id | 0x000001d0000003a3 | |

72 | htop | 0x00007f7f33f15298 | |

96 | hend | 0x00007f7f33f16540 | |

88 | heap | 0x00007f7f33f11470 | |

104 | heap_sz | 0x0000000000000a1a | |

80 | stop | 0x00007f7f33f16480 | |

592 | gen_gcs | 0x0000000000000012 | |

594 | max_gen_gcs | 0x000000000000ffff | |

552 | high_water | 0x00007f7f33f11c50 | |

560 | old_hend | 0x00007f7f33e90648 | |

568 | old_htop | 0x00007f7f33e8f8e8 | |

576 | old_head | 0x00007f7f33e8e770 | |

112 | min_heap_.. | 0x00000000000000e9 | |

328 | rcount | 0x0000000000000000 | |

336 | reds | 0x0000000000002270 | |

16 | tracer | 0xfffffffffffffffb | |

24 | trace_fla.. | 0x0000000000000000 | |

344 | group_lea.. | 0x0000019800000333 | |

352 | flags | 0x0000000000002000 | |

360 | fvalue | 0xfffffffffffffffb | |

368 | freason | 0x0000000000000000 | |

320 | fcalls | 0x00000000000005a2 | |

384 | next | 0x0000000000000000 | |

48 | reg | 0x0000000000000000 | |

56 | nlinks | 0x00007f7f3cbc0750 | |

616 | mbuf | 0x0000000000000000 | |

640 | mbuf_sz | 0x0000000000000000 | |

464 | dictionary | 0x0000000000000000 | |

472 | seq..clock | 0x0000000000000000 | |

480 | seq..astcnt | 0x0000000000000000 | |

488 | seq..token | 0xfffffffffffffffb | |

496 | intial[0] | 0x000000000000320b | |

504 | intial[1] | 0x0000000000000c8b | |

512 | intial[2] | 0x0000000000000002 | |

520 | current | 0x00007f7f3be87c20 | 0x000000000000ed8b |

296 | cp | 0x00007f7f3d3a5100 | 0x0000000000440848 |

304 | i | 0x00007f7f3be87c38 | 0x000000000044353a |

312 | catches | 0x0000000000000001 | |

224 | arity | 0x0000000000000000 | |

232 | arg_reg | 0x00007f7f3cbc04f8 | 0x000000000000320b |

240 | max_arg_reg | 0x0000000000000006 | |

248 | def..reg[0] | 0x000000000000320b | |

256 | def..reg[1] | 0x0000000000000c8b | |

264 | def..reg[2] | 0x00007f7f33ec9589 | |

272 | def..reg[3] | 0x0000000000000000 | |

280 | def..reg[4] | 0x0000000000000000 | |

288 | def..reg[5] | 0x00000000000007d0 | |

136 | nsp | 0x0000000000000000 | |

144 | nstack | 0x0000000000000000 | |

152 | nstend | 0x0000000000000000 | |

160 | ncallee | 0x0000000000000000 | |

56 | ncsp | 0x0000000000000000 | |

64 | narity | 0x0000000000000000 | |

---------------------------------------------------------------

true

4>现在有了这些检查工具的支持,我们准备看看这些领域的PCB意味着什么。

3.3. 进程控制块(PCB)

进程控制块包含控制进程行为和当前状态的所有字段。在本节和本章的其余部分,我们将介绍最重要的字段。我们将在本章中省略与执行和跟踪有关的一些字段,而在 Chapter 5 中讨论那些字段。

如果你想比我们在本章中介绍的内容了解的更深入,你可以看看PCB 的 C 源代码。PCB在文件 link: ' erl_process.h ' 中被实现为一个名为 process 的 C 结构体。

`id` 包含进程的 ID (或 PID)。

0 | id | 0x000001d0000003a3 | |

进程 ID 是一个 Erlang 项式,因此会有 tag (参见 Chapter 4 )。这意味着4个最低有效位是一个标签 (tag, 0011)。在代码部分,有一个检查 Erlang 项式的模块(请参阅 show.erl ),我们将在关于类型的一章中介绍它。不过,我们现在可以使用它来检查加了标签的项式的类型。

4> show:tag_to_type(16#0000001d0000003a3).

pid

5>字段 htop 和 stop 分别是指向堆和栈顶部的指针,也就是说,它们指向堆或栈的下一个空闲槽。字段 heap (start) 和 hend 指向整个堆的开始和结束, heap_sz 用单词表示堆的大小。在64位机器上 hend - heap = heap_sz * 8 ,在32位机器上 hend - heap = heap_sz * 4 。

字段 min_heap_size 是堆开始时的大小,它不会缩小到小于这个值,默认值是 233。

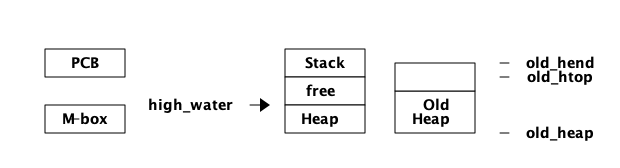

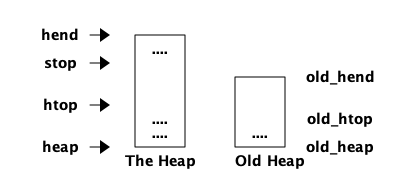

我们现在可以用 PCB 控制堆的形状的字段来精炼进程堆的图片:

但是,等一下,为什么我们有堆开始和堆结束,但没有栈的开始和结束呢?这是因为 BEAM 使用了一种通过同时分配堆和堆栈来节省空间和指针的技巧。现在,我们第一次修正脑海里进程的内存图像。堆和栈实际上在同一个内存区域:

栈向低内存地址增长,堆向高内存地址增长,所以我们也可以通过添加栈顶指针来优化堆的图片:

当指针 htop 和 stop 相遇,进程将耗尽空闲内存,必须进行垃圾收集来释放内存。

3.4. 垃圾收集器 (GC)

Erlang 使用每个进程复制分代垃圾收集器来管理堆内存。当堆 (或栈,因为它们共享分配的内存块) 上没有更多空间时,垃圾收集器就会开始释放内存。

GC 分配一个名为 to space 的新内存区域。然后,它遍历栈以找到所有活动根,并跟踪每个根,将堆上的数据复制到新堆。最后,它还将栈复制到新堆并释放旧的内存区域。

GC 是由 PCB 中的以下字段控制的:

Eterm *high_water;

Eterm *old_hend; /* Heap pointers for generational GC. */

Eterm *old_htop;

Eterm *old_heap;

Uint max_heap_size; /* Maximum size of heap (in words). */

Uint16 gen_gcs; /* Number of (minor) generational GCs. */

Uint16 max_gen_gcs; /* Max minor gen GCs before fullsweep. */由于垃圾收集器是分代的,所以大多数时候它将使用启发式方法来查看新数据。也就是说,在所谓的 minor collection 中,GC 只查看栈的顶部并将新数据移动到新堆中。旧数据,即在堆上的 high_water 标记 (见下图) 以下分配的数据,被移动到一个称为旧堆(old heap)的特殊区域。

大多数时候,每个进程都有另一个堆区域:旧堆,由PCB中的字段 old_heap、 old_htop 以及 old_hend 处理。这几乎把我们带回了原来的进程图,即四个内存区域:

当一个进程启动时是没有旧堆的,但是一旦年轻数据成熟为旧数据,并且存在垃圾收集,就会分配旧堆。当有 major collection (也称为 full sweep) 时,旧堆被垃圾收集。请参阅 Chapter 12 了解垃圾收集如何工作的更多细节。在那一章中,我们还将看到如何跟踪和修复与内存相关的问题。

3.5. 信箱(Mailbox)和消息传递

进程通信通过消息传递完成。进程发送被实现,以便发送进程将消息从自己的堆复制到接收进程的邮箱。

在 Erlang 的 早期,并发是通过调度器中的多任务来实现的。我们将在本章后面的调度器一节中更多地讨论并发性,现在值得注意的是,在 Erlang 的第一版中没有并行性,那时一次只能同时运行一个进程。在那个版本中,发送进程可以直接在接收进程的堆上写入数据。

3.5.1. 并行发送消息

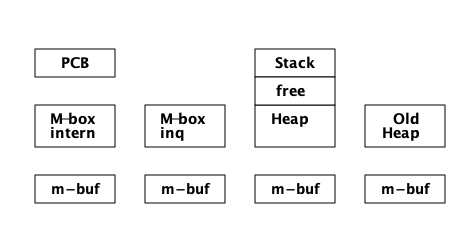

当多核系统被引入,Erlang 实现被扩展为多个调度器来调度多个并行运行的进程时,在不获取接收方的 main lock 的情况下直接写另一个进程的堆就不再安全了。此时引入了 m-bufs 的概念 (也称为“堆片段”, heap fragments)。 m-bufs 是一个在进程堆外的内存区域,其他进程可以安全地写入数据。

如果发送进程不能获得锁,它就可以将消息写入 m-buf 。当消息的所有数据都已复制到 m-buf 时,该消息将通过邮箱链接到进程。链接(LINK_MESSAGE, erl_message.h)将消息追加到接收方的消息队列最后。

垃圾收集器然后将这些消息复制到进程的堆中。为了减少 GC 时的压力,邮箱被分成两个列表,一个包含已看到的消息,另一个包含新消息。GC 不必查看任何新消息,因为我们知道它们将在 GC 中存活下来(它们仍然在邮箱中),这样我们可以避免一些复制。

3.6. 无锁消息传递

在 Erlang 19 中引入了一个新的可以每个进程分别设置的 message_queue_data ,它可以取 on_heap 或 off_heap 的值。当设置为 on_heap 时,发送进程将首先尝试获取接收方的 main lock ,如果成功,则消息将直接复制到接收方的堆上。以上场景只有在接收方被挂起并且没有其他进程获取该锁以发送给同一进程时才发生。如果发送方不能获得锁,它将分配一个堆片段并将消息复制到那里。

如果标志设置为 off_heap ,发送方将不会尝试获得锁,而是直接写入堆片段。这将减少锁争用,但是分配一个堆片段比直接写入已经分配的进程堆的开销更大,而且会导致更大的内存使用。可能进程已经分配了一个大的空堆,但发送者依然会将新消息写入新的堆片段。

使用 on_heap 方式,所有消息,包括直接分配在堆上的消息和堆碎片中的消息,都是被 GC 复制的。如果消息队列很大,许多消息没有处理,因此仍然是活动的,它们将被提升到旧堆,进程堆的大小将增加,从而导致更高的内存使用量。

当消息被复制到接收进程时,所有消息都被添加到一个链表 ( mailbox ) 中。如果消息被复制到接收进程的堆中,该消息将链接到 “内部消息队列” ( internal message queue ,或 seen 消息) 并由 GC 检查。在 off_heap 分配方案中,新消息被放置在 “外部” ( external ) message in queue 中,并被 GC 忽略。

3.6.1. 消息的内存区域

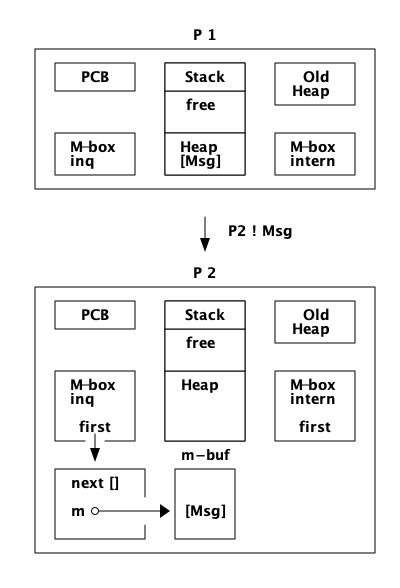

现在,我们可以再次将进程描述为四个内存区域的看法组一个修正了。现在每个进程由五个内存区域 ( heap ,stack, PCB , internal mailbox, 和 external mailbox ) 和不同数量的堆碎片 ( m-bufs )组成:

每个邮箱都包含长度和两个指针信息, internal queue 的信息存储在字段 msg.len, msg.first, msg.last 中。用于内部队列和msg_inq,external in queue 的信息存储在 msg_inq.len, msg_inq.first, 以及 msg_inq.last 中。还有一个指针指向下一个要查看的消息( msg.save ),以实现选择性接收。

3.6.2. 检查消息处理

让我们使用自省工具来更详细地了解它是如何工作的。我们首先在邮箱中设置一个带有消息的进程,然后查看PCB。

4> P = spawn(fun() -> receive stop -> ok end end).

<0.63.0>

5> P ! start.

start

6> hipe_bifs:show_pcb(P).

...

408 | msg.first | 0x00007fd40962d880 | |

416 | msg.last | 0x00007fd40962d880 | |

424 | msg.save | 0x00007fd40962d880 | |

432 | msg.len | 0x0000000000000001 | |

696 | msg_inq.first | 0x0000000000000000 | |

704 | msg_inq.last | 0x00007fd40a306238 | |

712 | msg_inq.len | 0x0000000000000000 | |

616 | mbuf | 0x0000000000000000 | |

640 | mbuf_sz | 0x0000000000000000 | |

...从这里我们可以看到消息队列中有一条消息, first, last 和 save 指针都指向该消息。

如前所述,可以通过设置标志 message_queue_data 来强制消息进入 in queue 队列。我们可以用以下程序来尝试:

-module(msg).

-export([send_on_heap/0

,send_off_heap/0]).

send_on_heap() -> send(on_heap).

send_off_heap() -> send(off_heap).

send(How) ->

%% Spawn a function that loops for a while

P2 = spawn(fun () -> receiver(How) end),

%% spawn a sending process

P1 = spawn(fun () -> sender(P2) end),

P1.

sender(P2) ->

%% Send a message that ends up on the heap

%% {_,S} = erlang:process_info(P2, heap_size),

M = loop(0),

P2 ! self(),

receive ready -> ok end,

P2 ! M,

%% Print the PCB of P2

hipe_bifs:show_pcb(P2),

ok.

receiver(How) ->

erlang:process_flag(message_queue_data,How),

receive P -> P ! ready end,

%% loop(100000),

receive x -> ok end,

P.

loop(0) -> [done];

loop(N) -> [loop(N-1)].有了这个程序,我们可以试着使用 on_heap 或 off_heap 模式发送消息,并在每次发送后查看 PCB。使用 on_heap 模式,我们得到了与之前的消息发送相同的结果:

5> msg:send_on_heap().

...

408 | msg.first | 0x00007fd4096283c0 | |

416 | msg.last | 0x00007fd4096283c0 | |

424 | msg.save | 0x00007fd40a3c1048 | |

432 | msg.len | 0x0000000000000001 | |

696 | msg_inq.first | 0x0000000000000000 | |

704 | msg_inq.last | 0x00007fd40a3c1168 | |

712 | msg_inq.len | 0x0000000000000000 | |

616 | mbuf | 0x0000000000000000 | |

640 | mbuf_sz | 0x0000000000000000 | |

...如果我们尝试发送到一个设置为 off_heap 标志的进程,消息会落在 in queue 队列中:

6> msg:send_off_heap().

...

408 | msg.first | 0x0000000000000000 | |

416 | msg.last | 0x00007fd40a3c0618 | |

424 | msg.save | 0x00007fd40a3c0618 | |

432 | msg.len | 0x0000000000000000 | |

696 | msg_inq.first | 0x00007fd3b19f1830 | |

704 | msg_inq.last | 0x00007fd3b19f1830 | |

712 | msg_inq.len | 0x0000000000000001 | |

616 | mbuf | 0x0000000000000000 | |

640 | mbuf_sz | 0x0000000000000000 | |

...3.6.3. 向进程发送消息的过程

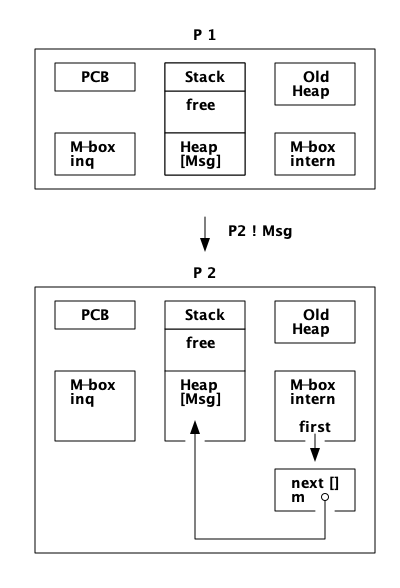

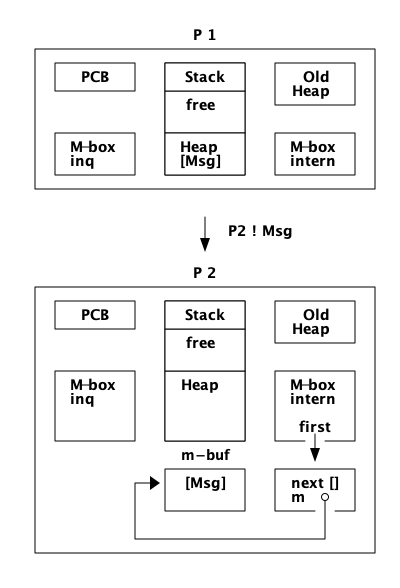

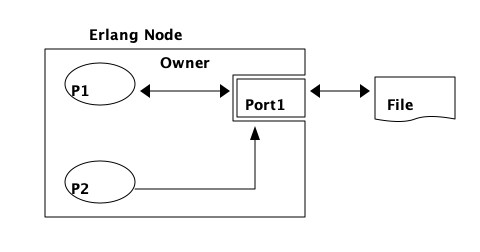

现在我们将忽略分布情况,也就是说我们不会考虑Erlang节点之间发送的消息。想象两个过程 P1 和 P2。进程 P1 想向进程 `P2`发送一条消息(Msg),如图所示:

进程 P1 将执行以下步骤:

-

计算 Msg 的大小。

-

为消息分配空间(如前所述,在

P2的堆上或堆外)。 -

将 Msg 从

P1的堆复制到分配的空间。 -

分配并填充一个 ErlMessage 结构体来包装消息。

-

将 ErlMessage 链接到 ErlMsgQueue 或 ErlMsgInQueue。

如果进程 P2 被挂起,没有其他进程尝试向 P2 发送消息,并且堆上有空间,分配策略为 on_heap,那么消息将直接在堆上写入:

如果 P1 不能获得 P2 的 main lock,或者 P2 的堆空间不够,分配策略为 on_heap,那么消息将写入 m-buf,但链接到内部邮箱:

在一次GC之后,消息将被移动到堆中。

如果分配策略是 off_heap,消息将以 m-buf 结束,并链接到外部邮箱:

在一次 GC 之后,消息仍然在 m-buf 中。直到接收到该消息并从堆上的其他对象或从栈可访问该消息,该消息才会在 GC 期间被复制到进程堆中。

3.6.4. 消息接收

Erlang 支持选择性接收,这意味着不匹配的消息可以留在邮箱中等待以后收取。如果消息不匹配,即使信箱中有消息的时候,进程也可能是挂起的。 msg.save 字段包含一个指向下一条要查看的消息的指针。

在后面的章节中,我们将详细介绍 m-bufs 以及垃圾收集器如何处理邮箱。在后面的章节中,我们还将详细介绍如何在 BEAM 中实现消息接收。

3.6.5. 消息传递调优

使用 Erlang 19 中引入的新 message_queue_data 标志,您可以以一种新的方式用内存空间来 ”交换“ 执行时间。如果接收进程已经过载并一直持有 main lock,那么使用 off_heap 分配可能是一个好策略,这种策略能让发送进程快速地将消息转储到 m-buf 中。

如果两个进程有一个良好平衡的生产者消费者行为,其中没有真正争夺进程锁,那么直接在接收者堆上分配会更快,并且会使用更少的内存。

如果接收方已经过载,且不断接受消息,处理消息的速度慢与接受新消息的速度,那么它实际上可能会开始使用更多的内存,因为消息被不断复制到堆中,并迁移到旧堆中。由于未读消息被认为是活动的,因此堆将不断增长并使用更多内存。

为了找出哪种分配策略最适合你的系统,你需要对它进行基准测试和行为度量。要做的第一个也是最简单的测试可能是在系统开始时更改默认的分配策略。ERTS 的 hmqd 标志将默认策略设置为 off_heap 或 on_heap。如果启动Erlang 时没有更改此标志,则默认为 on_heap。通过设置基准,让 Erlang 以 +hmqd off_heap 方式启动,您可以测试如果所有进程都使用非堆分配,系统的表现是更好还是更差。然后,您可能希望找到瓶颈进程,并通过配置切换分配策略来只测试这些进程。

3.7. 进程字典(PD)

实际上,进程中还有一个可以存储 Erlang 项式的内存区域,即 Process Dictionary。

Process Dictionary (PD) 是一个进程的本地键值存储。这样做的一个优点是,所有的键和值都存储在堆中,不需要像 send 或 ETS 表那样进行复制。

我们现在可以用另一个内存区域 - PD,进程字典,来更新我们对进程观点:

对于 PD 这么小的数组,在长度增长之前,你肯定会遇到一些碰撞。每个哈希值指向一个具有键值对的 bucket。bucket 实际上是堆上的 Erlang list。list 中的每个条目都是同样存储在堆中的二元元组({key, Value})。

在PD中放置一个元素并不是完全自由的,它会导致一个额外的元组和一个缺点,并可能导致垃圾收集被触发。更新位于 bucket 中的 dictionary 中的 key,会导致整个bucket (整个列表) 被重新分配,以确保我们不会获得从旧堆指向新堆的指针。(在 Chapter 12 中,我们将看到垃圾收集如何工作的细节。)

3.8. 深入

在本章中,我们已经了解了流程是如何实现的。特别地,我们查看了进程的内存是如何组织的,消息是如何传递的,以及PCB中的信息。我们还介绍了一些用于检查进程自检的工具,如 erlang:process_info 和 hipe:show*_bifs。

使用函数 erlang:processes/0 和 erlang:process_info/1,2 检查系统中的进程。以下是一些可以尝试的功能:

1> Ps = erlang:processes().

[<0.0.0>,<0.3.0>,<0.6.0>,<0.7.0>,<0.9.0>,<0.10.0>,<0.11.0>,

<0.12.0>,<0.13.0>,<0.14.0>,<0.15.0>,<0.16.0>,<0.17.0>,

<0.19.0>,<0.20.0>,<0.21.0>,<0.22.0>,<0.23.0>,<0.24.0>,

<0.25.0>,<0.26.0>,<0.27.0>,<0.28.0>,<0.29.0>,<0.33.0>]

2> P = self().

<0.33.0>

3> erlang:process_info(P).

[{current_function,{erl_eval,do_apply,6}},

{initial_call,{erlang,apply,2}},

{status,running},

{message_queue_len,0},

{messages,[]},

{links,[<0.27.0>]},

{dictionary,[]},

{trap_exit,false},

{error_handler,error_handler},

{priority,normal},

{group_leader,<0.26.0>},

{total_heap_size,17730},

{heap_size,6772},

{stack_size,24},

{reductions,25944},

{garbage_collection,[{min_bin_vheap_size,46422},

{min_heap_size,233},

{fullsweep_after,65535},

{minor_gcs,1}]},

{suspending,[]}]

4> lists:keysort(2,[{P,element(2,erlang:process_info(P,

total_heap_size))} || P <- Ps]).

[{<0.10.0>,233},

{<0.13.0>,233},

{<0.14.0>,233},

{<0.15.0>,233},

{<0.16.0>,233},

{<0.17.0>,233},

{<0.19.0>,233},

{<0.20.0>,233},

{<0.21.0>,233},

{<0.22.0>,233},

{<0.23.0>,233},

{<0.25.0>,233},

{<0.28.0>,233},

{<0.29.0>,233},

{<0.6.0>,752},

{<0.9.0>,752},

{<0.11.0>,1363},

{<0.7.0>,1597},

{<0.0.0>,1974},

{<0.24.0>,2585},

{<0.26.0>,6771},

{<0.12.0>,13544},

{<0.33.0>,13544},

{<0.3.0>,15143},

{<0.27.0>,32875}]

9>4. Erlang 类型系统和标签

要理解 ERTS 最重要的方面之一,是 ERTS 如何存储数据,即 Erlang 项式如何存储在内存中。这为你理解垃圾收集如何工作、消息传递如何工作提供了基础,并使你了解需要多少内存。

在本章中,您将学习Erlang 的基本数据类型以及如何在ERTS中实现它们。这些知识对于理解内存分配和垃圾收集这一章非常重要,请参阅Chapter 12。

4.1. Erlang 类型系统

Erlang 是强类型( strong typed )语言。也就是说,无法将一种类型强制转换 ( coerce ) 为另一种类型,只能从一种类型转换 ( convert ) 为另一种类型。与 C语言 比较,在 C 语言中,你可以强制一个 char 转换为一个 int,或任何类型的指针指向 ( void * )。

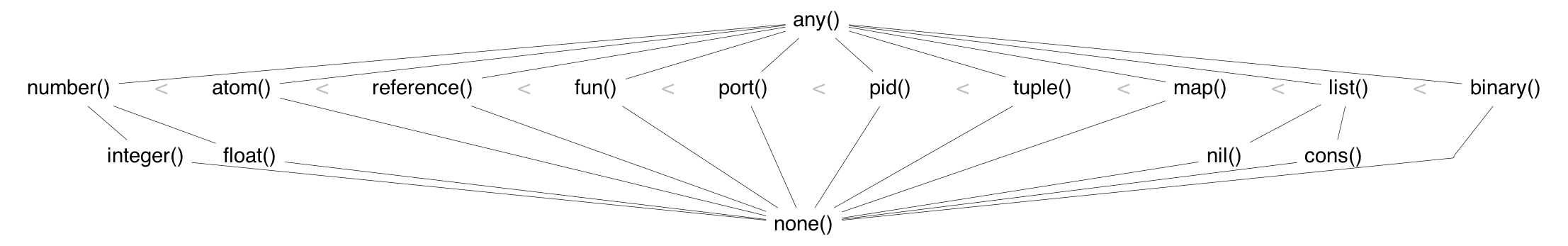

Erlang 类型格( lattice )是非常扁平的,只有很少的子类型,number有 整数( integer ) 和浮点( float )子类型,list有 nil(空表) 和 cons(列表单元,译注:源自list constructor) 子类型 (也可以认为每个大小的元组都有一个子类型)。

Erlang类型格

Erlang 中的所有项都有一个部分顺序 ( < 和 > ),上面型格图中各种类型在是从左到右排序的。

顺序是部分的而不是全部的,因为整数和浮点数在比较之前是要进行转换的。(1 < 1.0) 和 (1.0 < 1) 都是 false,(1 =< 1.0和1 >= 1.0) 和 (1 =/= 1.0) 都是 false。精度较低的数字被转换为精度较高的数字。通常整数被转换为浮点数。对于非常大或非常小的浮点数,如果所有有效数字都在小数点的左边,浮点数就会被转换为整数。

从 Erlang 18 开始,当比较两个 Map 的顺序时,它们的比较如下:如果一个 Map 的元素少于另一个,则认为它更小。否则,按项顺序比较键,即认为所有整数都比所有浮点数小。如果所有的键都是相同的,那么每个值对 (按键的顺序) 将进行算术比较,即首先将它们转换为相同的精度。

当比较相等时也是如此,因此 #{1 => 1.0}== #{1 => 1},但是 #{1.0 => 1}/= #{1 => 1}。

在 Erlang 18 之前的版本,key 的比较也是算术比较。

Erlang 是动态类型的。也就是说,将在运行时检查类型,如果发生类型错误,则抛出异常。编译器不会在编译时检查类型,这与 C 或 Java 等静态类型语言不同,在这些语言中,编译时可能会出现类型错误。

Erlang 类型系统的这些方面是强动态类型,类型上有一个顺序,这给语言的实现带来了一些约束。为了能够在运行时检查和比较类型,每个 Erlang 项式都必须携带它的类型。

这可以通过标记这些项式来解决。

4.2. 标签方案

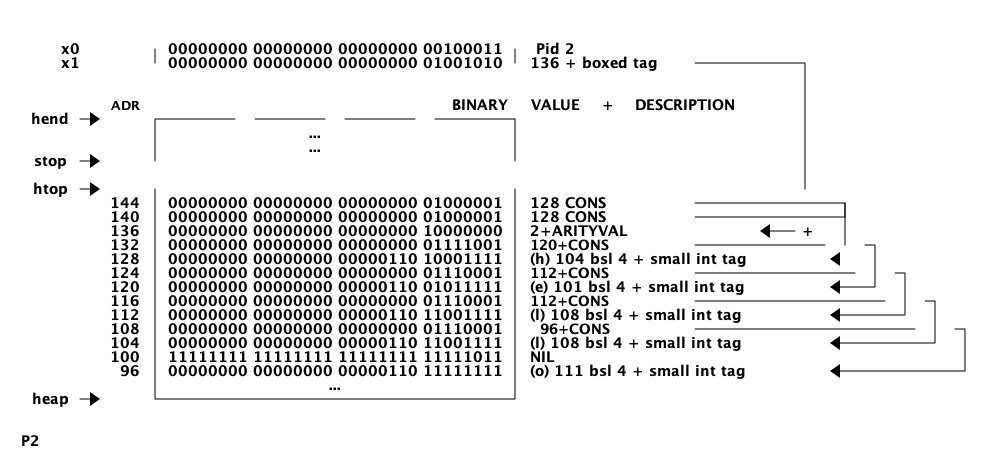

在 Erlang 项式的内存表示中,为类型标记保留一些位。出于性能原因,项式被分为即时 ( immediates ) 和装箱 ( boxed ) 项式。即时项式可以放入一个机器字中,也就是说,它可以放在寄存器(译注:指通用寄存器)或堆栈槽中。装箱项式由两部分组成:标记的指针和存储在进程堆上的若干字长。除列表外,存储在堆中的装箱 ( box ) 项式都有一个头 header 和一个体 body。

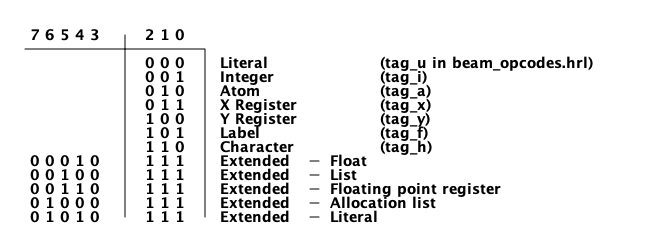

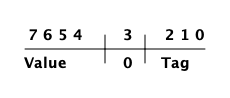

目前ERTS使用分级标签方案,HiPE小组的技术报告解释了该方案背后的历史和原因。(参见 http://www.it.uu.se/research/publications/2000029/) 标签方案的实现见 erl_term.h。

基本思想是使用标签的最低有效位。由于大多数现代CPU体系结构对32位或64位的字长进行对齐,因此至少有两位是指针“未使用的”。这些位可以用作标签。不幸的是,对于 Erlang 中的所有类型,这两个位是不够的,因此需要使用更多的位。(译注:要了解这部分的内容,最好结合 OTP 源码:erl_term.h L: 70 开始阅读 )

4.2.1. 即时类型的标签

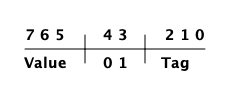

主标签(最低 2 位)被以如下方式使用:

00 Header (on heap) CP (on stack) 01 List (cons,译注:列表项) 10 Boxed 11 Immediate

(译注:以下内容源自 OTP erl_term.h, L:70)

#define _TAG_PRIMARY_SIZE 2

#define _TAG_PRIMARY_MASK 0x3

#define TAG_PRIMARY_HEADER 0x0

#define TAG_PRIMARY_LIST 0x1

#define TAG_PRIMARY_BOXED 0x2

#define TAG_PRIMARY_IMMED1 0x3Header 标记仅用于堆上的项式标签头,稍后将对此进行详细说明。栈上的 00 表示返回地址。列表标记用于 cons 单元格,装箱类型标记用于指向堆的所有其他装箱类型的指针。即时类型标签被进一步划分如下:

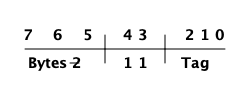

00 11 Pid 01 11 Port 10 11 Immediate 2 11 11 Small integer

(译注:以下内容源自 OTP erl_term.h, L:79)

#define _TAG_IMMED1_SIZE 4

#define _TAG_IMMED1_MASK 0xF

#define _TAG_IMMED1_PID ((0x0 << _TAG_PRIMARY_SIZE) | TAG_PRIMARY_IMMED1)

#define _TAG_IMMED1_PORT ((0x1 << _TAG_PRIMARY_SIZE) | TAG_PRIMARY_IMMED1)

#define _TAG_IMMED1_IMMED2 ((0x2 << _TAG_PRIMARY_SIZE) | TAG_PRIMARY_IMMED1)

#define _TAG_IMMED1_SMALL ((0x3 << _TAG_PRIMARY_SIZE) | TAG_PRIMARY_IMMED1)Pid 和 port 是即时类型的,可以比较有效的比较大小。它们实际上只是引用,pid 是一个进程标识符,它指向一个进程。该进程不驻留在任何进程的堆中,而是由PCB处理。port 的工作方式也大致相同。

在 ERTS 中有两种类型的整数:小整数和大整数。小整数使用一个机器字减去四个标签位,即在 32位机和 64 位机上分别对应 28 位或 60 位。另一方面,大整数可以根据需要大小扩展 ( 仅受堆空间大小的限制 ),并作为装箱对象存储在堆中。

小整数的所有 4 个标记位为 1,仿真器可以在进行整数运算时进行有效的测试,以查看两个参数是否都是即时类型的。 (is_both_small(x,y) 被定义为 (x & y & 1111) == 1111).

Immediate 2 的标签被进一步划分如下:

00 10 11 Atom 01 10 11 Catch 10 10 11 [UNUSED] 11 10 11 Nil

(译注:以下内容源自 OTP erl_term.h, L:86)

#define _TAG_IMMED2_SIZE 6

#define _TAG_IMMED2_MASK 0x3F

#define _TAG_IMMED2_ATOM ((0x0 << _TAG_IMMED1_SIZE) | _TAG_IMMED1_IMMED2)

#define _TAG_IMMED2_CATCH ((0x1 << _TAG_IMMED1_SIZE) | _TAG_IMMED1_IMMED2)

#define _TAG_IMMED2_NIL ((0x3 << _TAG_IMMED1_SIZE) | _TAG_IMMED1_IMMED2)原子由(指向) atom table 表中的索引和 atom 标签组成。要比较两个 atom 即时类型变量是否相等,只要比较两个原子的即时表示就可以。

在 atom table 中,原子被存储为这样的 C 结构体:

typedef struct atom {

IndexSlot slot; /* MUST BE LOCATED AT TOP OF STRUCT!!! */

int len; /* length of atom name */

int ord0; /* ordinal value of first 3 bytes + 7 bits */

byte* name; /* name of atom */

} Atom;由于 len 和 ord0 字段,只要两个原子不以相同的四个字母开头,它们的顺序可以高效地进行比较。

Catch 即时类型只在堆栈上使用。它包含一个间接的指针,指向代码中的接续点(continuation point),在异常发生后执行应该从接续点继续开始。在 Chapter 8 中有更多的内容。

Nil 标记用于空列表( Nil 或 [] )。机器字的其余部分都被 1 填充。

4.2.2. 装箱项式的标签

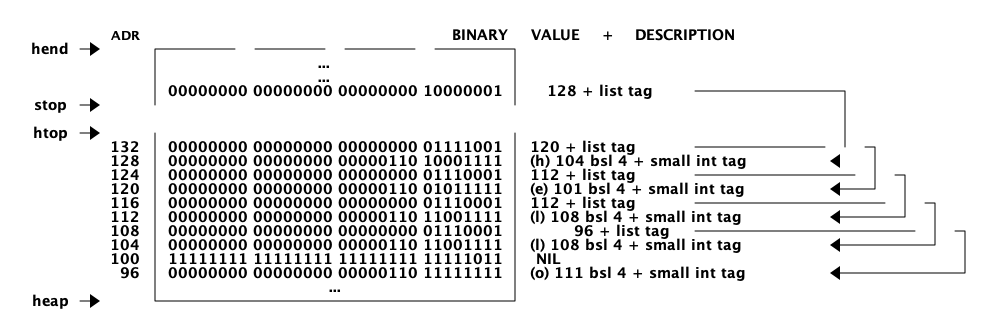

存储在堆上的 Erlang 项式使用几个机器字。列表或 cons 列表项单元只是堆上两个连续的字:头和尾(或者在 lisp 和 ERTS 代码的某些地方称为 car 和 cdr)。

Erlang 中的字符串只是表示字符的整数列表。在 Erlang OTP R14 之前的版本中,字符串被编码为 ISO-latin-1 (ISO8859-1)。自 R14 开始,字符串被编码为 Unicode 代码列表。对于 latin-1 中的字符串,它们和 Unicode 没有区别,因为latin-1是Unicode的子集。

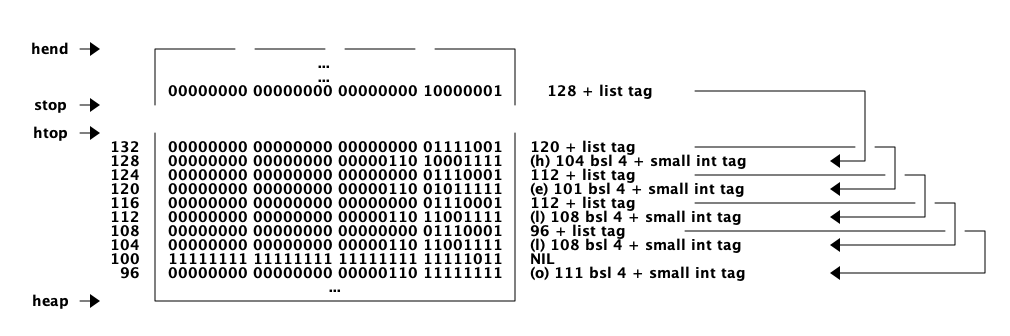

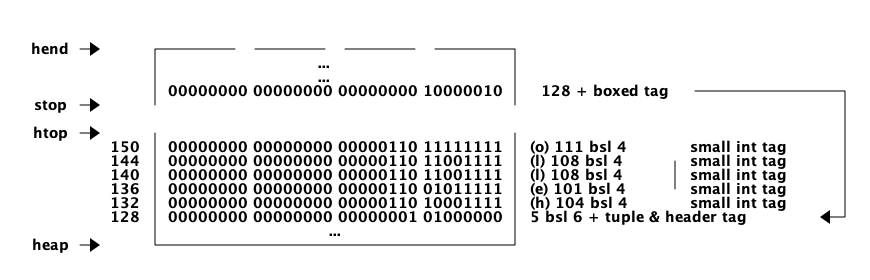

字符串 "hello" 在内存中看起来可能是这样的:

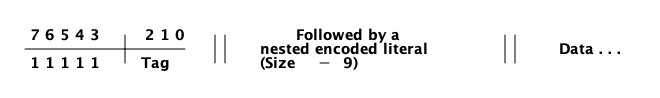

所有其他装箱的项式的主标签都以 Header 00 开头。标头字使用 4 位标头标记和 2 位主标头标记(00),它还具有一个 arity域,用来表示装箱类型的变量使用了多少个字存储。在32位计算机上,它看起来是这样的:aaaaaaaaaaaaaaaaaaaaaatttt00。

标签如下:

0000 ARITYVAL (Tuples) 0001 BINARY_AGGREGATE | 001s BIGNUM with sign bit | 0100 REF | 0101 FUN | THINGS 0110 FLONUM | 0111 EXPORT | 1000 REFC_BINARY | | 1001 HEAP_BINARY | BINARIES | 1010 SUB_BINARY | | 1011 [UNUSED] 1100 EXTERNAL_PID | | 1101 EXTERNAL_PORT | EXTERNAL THINGS | 1110 EXTERNAL_REF | | 1111 MAP

(译注:以下内容源自 OTP erl_term.h, L:92)

/*

* HEADER representation:

*

* aaaaaaaaaaaaaaaaaaaaaaaaaatttt00 arity:26, tag:4

*

* HEADER tags:

*

* 0000 ARITYVAL

* 0001 BINARY_AGGREGATE |

* 001x BIGNUM with sign bit |

* 0100 REF |

* 0101 FUN | THINGS

* 0110 FLONUM |

* 0111 EXPORT |

* 1000 REFC_BINARY | |

* 1001 HEAP_BINARY | BINARIES |

* 1010 SUB_BINARY | |

* 1011 Not used; see comment below

* 1100 EXTERNAL_PID | |

* 1101 EXTERNAL_PORT | EXTERNAL THINGS |

* 1110 EXTERNAL_REF | |

* 1111 MAP

*

* COMMENTS:

*

* - The tag is zero for arityval and non-zero for thing headers.

* - A single bit differentiates between positive and negative bignums.

* - If more tags are needed, the REF and and EXTERNAL_REF tags could probably

* be combined to one tag.

*

* XXX: globally replace XXX_SUBTAG with TAG_HEADER_XXX

*/

#define ARITYVAL_SUBTAG (0x0 << _TAG_PRIMARY_SIZE) /* TUPLE */

#define BIN_MATCHSTATE_SUBTAG (0x1 << _TAG_PRIMARY_SIZE)

#define POS_BIG_SUBTAG (0x2 << _TAG_PRIMARY_SIZE) /* BIG: tags 2&3 */

#define NEG_BIG_SUBTAG (0x3 << _TAG_PRIMARY_SIZE) /* BIG: tags 2&3 */

#define _BIG_SIGN_BIT (0x1 << _TAG_PRIMARY_SIZE)

#define REF_SUBTAG (0x4 << _TAG_PRIMARY_SIZE) /* REF */

#define FUN_SUBTAG (0x5 << _TAG_PRIMARY_SIZE) /* FUN */

#define FLOAT_SUBTAG (0x6 << _TAG_PRIMARY_SIZE) /* FLOAT */

#define EXPORT_SUBTAG (0x7 << _TAG_PRIMARY_SIZE) /* FLOAT */

#define _BINARY_XXX_MASK (0x3 << _TAG_PRIMARY_SIZE)

#define REFC_BINARY_SUBTAG (0x8 << _TAG_PRIMARY_SIZE) /* BINARY */

#define HEAP_BINARY_SUBTAG (0x9 << _TAG_PRIMARY_SIZE) /* BINARY */

#define SUB_BINARY_SUBTAG (0xA << _TAG_PRIMARY_SIZE) /* BINARY */

/* _BINARY_XXX_MASK depends on 0xB being unused */

#define EXTERNAL_PID_SUBTAG (0xC << _TAG_PRIMARY_SIZE) /* EXTERNAL_PID */

#define EXTERNAL_PORT_SUBTAG (0xD << _TAG_PRIMARY_SIZE) /* EXTERNAL_PORT */

#define EXTERNAL_REF_SUBTAG (0xE << _TAG_PRIMARY_SIZE) /* EXTERNAL_REF */

#define MAP_SUBTAG (0xF << _TAG_PRIMARY_SIZE) /* MAP */

#define _TAG_HEADER_ARITYVAL (TAG_PRIMARY_HEADER|ARITYVAL_SUBTAG)

#define _TAG_HEADER_FUN (TAG_PRIMARY_HEADER|FUN_SUBTAG)

#define _TAG_HEADER_POS_BIG (TAG_PRIMARY_HEADER|POS_BIG_SUBTAG)

#define _TAG_HEADER_NEG_BIG (TAG_PRIMARY_HEADER|NEG_BIG_SUBTAG)

#define _TAG_HEADER_FLOAT (TAG_PRIMARY_HEADER|FLOAT_SUBTAG)

#define _TAG_HEADER_EXPORT (TAG_PRIMARY_HEADER|EXPORT_SUBTAG)

#define _TAG_HEADER_REF (TAG_PRIMARY_HEADER|REF_SUBTAG)

#define _TAG_HEADER_REFC_BIN (TAG_PRIMARY_HEADER|REFC_BINARY_SUBTAG)

#define _TAG_HEADER_HEAP_BIN (TAG_PRIMARY_HEADER|HEAP_BINARY_SUBTAG)

#define _TAG_HEADER_SUB_BIN (TAG_PRIMARY_HEADER|SUB_BINARY_SUBTAG)

#define _TAG_HEADER_EXTERNAL_PID (TAG_PRIMARY_HEADER|EXTERNAL_PID_SUBTAG)

#define _TAG_HEADER_EXTERNAL_PORT (TAG_PRIMARY_HEADER|EXTERNAL_PORT_SUBTAG)

#define _TAG_HEADER_EXTERNAL_REF (TAG_PRIMARY_HEADER|EXTERNAL_REF_SUBTAG)

#define _TAG_HEADER_BIN_MATCHSTATE (TAG_PRIMARY_HEADER|BIN_MATCHSTATE_SUBTAG)

#define _TAG_HEADER_MAP (TAG_PRIMARY_HEADER|MAP_SUBTAG)

#define _TAG_HEADER_MASK 0x3F

#define _HEADER_SUBTAG_MASK 0x3C /* 4 bits for subtag */

#define _HEADER_ARITY_OFFS 6

只带有 arity 的 元组类型 被存储在堆中,然后用 arity 下面的字表示每个元素。空的tuple{}与单词0一样存储 ( header 标记00、tuple 标记 0000 和 arity 0)。

binary 是一个不可变的字节数组。 binary 的内部表示有四种类型。 heap binaries 和 refc binaries 这两种类型包含二进制数据。其他两种类型,sub binaries 和 match contexts ( BINARY_AGGREGATE 标签) 子二进制文件和匹配上下文(BINARY_AGGREGATE标记)是对其他两种类型之一的较小引用。

使用 64 字节或更少空间的 binary 可以作为 heap binaries 直接存储在进程堆上。对较大的 binary 来说,它们被引用计数,且有效载荷存储在进程堆之外。对有效载荷的引用存储在进程堆上一个名为 ProcBin 的对象中。

我们将在 Chapter 12 更多地讨论二进制。

如果一个整数不能装入小整数 (字长减 4 位) 空间,它将以 “bignums” (或者叫任意精度整数) 的形式存储在堆中。bignum 在内存中有一个 header,后面跟着许多编码的字。header 中 bignum 标记的符号部分 (s) 对数字的符号进行编码(对于正数,s=0,对于负数,s=1)。

引用是一个“唯一的”( "unique") 项式,通常用于标记消息,以便实现进程邮箱上的通道。引用被实现为 82 位的计数器。在调用 make_ref/0 9671406556917033397649407 次后,计数器将折返并再次以 ref 0 重新开始。在程序生命周期内,你需要一个非常快的机器来执行那么多次 make_ref 调用。重新启动该节点后 (在这种情况下,它也将再次从0开始) 所有旧的本地 refs 都会消失。如果您将 pid 发送到另一个节点,它将成为一个 external ref,见下面描述:

在32位系统上,local ref 在堆上占用 4 个 32 位字长。在 64 位系统上,ref 在堆上占用 3 个 64 位字长。

|00000000 00000000 00000000 11010000| Arity 3 + ref tag

|00000000 000000rr rrrrrrrr rrrrrrrr| Data0

|rrrrrrrr rrrrrrrr rrrrrrrr rrrrrrrr| Data1

|rrrrrrrr rrrrrrrr rrrrrrrr rrrrrrrr| Data2

引用数为: (Data2 bsl 50) + (Data1 bsl 18) + Data0.

5. Erlang 虚拟机: BEAM

BEAM (Bogumil / Björn 抽象机)是在 Erlang 运行时系统中执行代码的机器。它是一台垃圾收集,规约值计数,虚拟,非抢占式,直接线程,寄存器式机器。如果这还不能说明什么,不用担心,在接下来的部分中,我们将介绍这些单词在此上下文中的含义。

虚拟机 BEAM 位于 Erlang 节点的核心。执行 Erlang 代码的是 BEAM。也就是说,是 BEAM 执行您的应用程序代码。理解 BEAM 是如何执行代码的,对于配置和调优您的代码至关重要。

BEAM 的设计对 BEAM 的其他部分有很大的影响。用于调度的原语会影响调度器 ( Chapter 11 ),Erlang 短语的表示以及与内存的交互会影响垃圾收集器 ( Chapter 12 )。通过理解 BEAM 的基本设计,您将更容易理解这些其他组件的实现。

5.1. 工作内存: 堆栈机?并不是!

与它的前身 JAM (Joe 's Abstract Machine) 是一个堆栈机不同,BEAM 是一个基于WAM [warren] 的寄存器机器。在堆栈机器中,指令的每个操作数首先被推入工作堆栈,然后指令弹出它的参数,然后将结果推入堆栈。

堆栈机在虚拟机和编程语言实现者中非常流行,因为它们很容易为其生成代码,而且代码变得非常紧凑。编译器不需要做任何寄存器分配,并且大多数操作不需要任何参数(在指令流中)。

编译表达式 "8 + 17 * 2." 到堆栈机器可以产生如下代码:

push 8 push 17 push 2 multiply add

compile(String) ->

[ParseTree] = element(2,

erl_parse:parse_exprs(

element(2,

erl_scan:string(String)))),

generate_code(ParseTree).

generate_code({op, _Line, '+', Arg1, Arg2}) ->

generate_code(Arg1) ++ generate_code(Arg2) ++ [add];

generate_code({op, _Line, '*', Arg1, Arg2}) ->

generate_code(Arg1) ++ generate_code(Arg2) ++ [multiply];

generate_code({integer, _Line, I}) -> [push, I].和一个更简单的虚拟堆栈机:

interpret(Code) -> interpret(Code, []).

interpret([push, I |Rest], Stack) -> interpret(Rest, [I|Stack]);

interpret([add |Rest], [Arg2, Arg1|Stack]) -> interpret(Rest, [Arg1+Arg2|Stack]);

interpret([multiply|Rest], [Arg2, Arg1|Stack]) -> interpret(Rest, [Arg1*Arg2|Stack]);

interpret([], [Res|_]) -> Res.And a quick test run gives us the answer:

1> stack_machine:interpret(stack_machine:compile("8 + 17 * 2.")).

42很好,您已经构建了您的第一个虚拟机!如何处理减法、除法和 Erlang 语言的其他部分留给读者作为练习。

无论如何,BEAM 不是 一个堆栈机,它是一个寄存器机器。在寄存器中,机器指令操作数存储在寄存器中而不是堆栈中,操作的结果通常在一个特定的寄存器中结束。

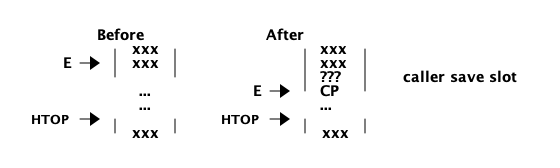

大多数寄存器机器仍然有一个用于向函数传递参数和保存返回地址的栈。BEAM 既有栈也有寄存器,但就像 WAM 一样,堆栈槽只可以通过称为 Y 寄存器(Y-registers)的寄存器访问。BEAM 也有一些 X 寄存器(X-registers)和一个特殊功能寄存器 X0 (有时也称为R0),它作为一个存储结果的累加器。

X 寄存器用作函数调用的参数寄存器,而寄存器 X0 用于存储返回值。

X 寄存器存储在 BEAM 模拟器的 c 数组中,可以从所有函数全局地访问它们。X0 寄存器缓存在一个本地变量中,该变量映射到大多数体系结构中本机上的物理机器寄存器。

Y 寄存器存储在调用方的堆栈框架中,仅供调用函数访问。为了跨函数调用保存一个值,BEAM 在当前栈帧中为它分配一个栈槽,然后将该值移动到Y寄存器。

我们使用 'S' flag 编译以下程序:

-module(add).

-export([add/2]).

add(A,B) -> id(A) + id(B).

id(I) -> I.之后,我们对 add 函数,得到了如下代码:

{function, add, 2, 2}.

{label,1}.

{func_info,{atom,add},{atom,add},2}.

{label,2}.

{allocate,1,2}.

{move,{x,1},{y,0}}.

{call,1,{f,4}}.

{move,{x,0},{x,1}}.

{move,{y,0},{x,0}}.

{move,{x,1},{y,0}}.

{call,1,{f,4}}.

{gc_bif,'+',{f,0},1,[{y,0},{x,0}],{x,0}}.

{deallocate,1}.

return.在这里,我们可以看到代码 (从 label 2 开始) 首先分配了一个栈槽,以获得空间来保存函数调用 id(A) 上的参数 B。然后该值由指令 {move,{x,1},{y,0}} 保存 (读做:将 x1 移动到 y0 或以命令式方式: y0:= x1)。

id 函数(在标签 f4 )然后被 {call,1,{f,4}} 调用。(我们稍后会了解参数 “1” 代表什么) 然后调用的结果(现在在 X0 中) 需要保存在堆栈 (Y0) 上,但是参数 B 保存在 Y0 中,所以 BEAM 做了一点变换:

除 x 和 y 寄存器外,还有一些特殊功能寄存器:

-

Htop - The top of the heap.(堆顶)

-

E - The top of the stack. (栈顶)

-

CP - Continuation Pointer, i.e. function return address (接续点)

-

I - instruction pointer (指令指针)

-

fcalls - reduction counter (规约值计数器)

这些寄存器是 PCB 中相应字段的缓存版本。

{move,{x,0},{x,1}}. % x1 := x0 (id(A))

{move,{y,0},{x,0}}. % x0 := y0 (B)

{move,{x,1},{y,0}}. % y0 := x1 (id(A))

现在我们在 x0 中有了第二个参数 B (第一个参数寄存器),我们可以再次调用 id 函数 {call,1,{f,4}}。

在调用后,x0 包含 id(B),y0 包含 id(A),现在我们可以进行加法操作:{gc_bif,'+',{f,0},1,[{y,0},{x,0}],{x,0}}。(稍后我们将详细讨论 BIF 调用和 GC。)

5.2. 分派(Dispatch):直接线程代码

BEAM 中的指令译码器是用一种被称为直接线程( directly threaded )代码的技术实现的。在这个上下文中,线程 thread 这个词与操作系统线程、并发性或并行性没有任何关系。它是通过虚拟机本身线程化的执行路径。

如果我们看一下上文所示的处理算术表达式的朴素堆栈机,就会发现我们使用 Erlang 原子和模式匹配来解码要执行的指令。这是一个非常重的解码机器指令的机器。在实际机器中,我们将每条指令编码为一个 “机器字” 整数。

我们可以使用 C 语言,将堆栈机重写为 字节码( byte code )机。首先,我们重写编译器,使其产生字节码。这是非常直接的,只需将每条被编码为 atom 的指令替换为表示该指令的字节。为了能够处理大于 255 的整数,我们将整数编码为一个存储大小 ( size ) 的字节,后面接的是用字节编码的整数数值。

compile(Expression, FileName) ->

[ParseTree] = element(2,

erl_parse:parse_exprs(

element(2,

erl_scan:string(Expression)))),

file:write_file(FileName, generate_code(ParseTree) ++ [stop()]).

generate_code({op, _Line, '+', Arg1, Arg2}) ->

generate_code(Arg1) ++ generate_code(Arg2) ++ [add()];

generate_code({op, _Line, '*', Arg1, Arg2}) ->

generate_code(Arg1) ++ generate_code(Arg2) ++ [multiply()];

generate_code({integer, _Line, I}) -> [push(), integer(I)].

stop() -> 0.

add() -> 1.

multiply() -> 2.

push() -> 3.

integer(I) ->

L = binary_to_list(binary:encode_unsigned(I)),

[length(L) | L].现在让我们用 C 语言编写一个简单的虚拟机。完整的代码可以在 Appendix C 中找到。

#define STOP 0

#define ADD 1

#define MUL 2

#define PUSH 3

#define pop() (stack[--sp])

#define push(X) (stack[sp++] = X)

int run(char *code) {

int stack[1000];

int sp = 0, size = 0, val = 0;

char *ip = code;

while (*ip != STOP) {

switch (*ip++) {

case ADD: push(pop() + pop()); break;

case MUL: push(pop() * pop()); break;

case PUSH:

size = *ip++;

val = 0;

while (size--) { val = val * 256 + *ip++; }

push(val);

break;

}

}

return pop();

}你看,用 C 语言写的虚拟机不需要非常复杂。这台机器只是一个循环,通过查看指令指针 ( instruction pointer , ip) 指向的值来检查每条指令的字节码。

对于每个字节码指令,它将通过指令字节码分支跳转,跳到对应指令的 case 上执行指令。这需要对指令进行解码,然后跳转到正确的代码上。如果我们看一下vsm.c (gcc -S vsm.c) 的汇编指令,我们可以看到解码器的内部循环:

L11:

movl -16(%ebp), %eax

movzbl (%eax), %eax

movsbl %al, %eax

addl $1, -16(%ebp)

cmpl $2, %eax

je L7

cmpl $3, %eax

je L8

cmpl $1, %eax

jne L5它必须将字节代码与每个指令代码进行比较,然后执行条件跳转。在一个指令集中有许多指令的真实机器中,这可能会变得相当昂贵。

更好的解决方案是有一个包含代码地址的表,这样我们就可以在表中使用索引来加载地址并跳转,而不需要进行比较。这种技术有时称为 标记线程代码 ( token threaded code )。更进一步,我们可以将实现指令的函数的地址存储在代码内存中。这叫做 子程序线程代码 ( subroutine threaded code )。

这种方法将使在运行时解码更简单,但它使整个VM更加复杂,因为它需要一个加载器。加载程序将字节代码指令替换为实现指令的函数的地址。

一个加载器可能看起来像这样:

typedef void (*instructionp_t)(void);

instructionp_t *read_file(char *name) {

FILE *file;

instructionp_t *code;

instructionp_t *cp;

long size;

char ch;

unsigned int val;

file = fopen(name, "r");

if(file == NULL) exit(1);

fseek(file, 0L, SEEK_END);

size = ftell(file);

code = calloc(size, sizeof(instructionp_t));

if(code == NULL) exit(1);

cp = code;

fseek(file, 0L, SEEK_SET);

while ( ( ch = fgetc(file) ) != EOF )

{

switch (ch) {

case ADD: *cp++ = &add; break;

case MUL: *cp++ = &mul; break;

case PUSH:

*cp++ = &pushi;

ch = fgetc(file);

val = 0;

while (ch--) { val = val * 256 + fgetc(file); }

*cp++ = (instructionp_t) val;

break;

}

}

*cp = &stop;

fclose(file);

return code;

}正如我们所看到的,我们在加载时做了更多的工作,包括对大于255的整数进行解码。(是的,我知道,以上代码对于非常大的整数是不安全的。)

如此,解码和分派循环的VM变得相当简单:

int run() {

sp = 0;

running = 1;

while (running) (*ip++)();

return pop();

}然后我们只需要实现这些指令:

void add() { int x,y; x = pop(); y = pop(); push(x + y); }

void mul() { int x,y; x = pop(); y = pop(); push(x * y); }

void pushi(){ int x; x = (int)*ip++; push(x); }

void stop() { running = 0; }在 BEAM 中,这个概念更进一步,BEAM使用直接线程代码(directly threaded code 有时也被称为 thread code )。在直接线程代码中,调用和返回序列被直接跳转到下一条指令的实现所取代。为了在 C 语言中实现这一点,BEAM 使用了 GCC "labels as values" 扩展。

稍后我们将进一步研究 BEAM 模拟器,但我们将快速了解 add 指令是如何实现的。由于大量使用宏,代码有些难以理解。这个 STORE_ARITH_RESULT 宏实际上隐藏了一个看起来像:I += 4; Goto(*I); 的分派函数。

#define OpCase(OpCode) lb_##OpCode

#define Goto(Rel) goto *(Rel)

...

OpCase(i_plus_jId):

{

Eterm result;

if (is_both_small(tmp_arg1, tmp_arg2)) {

Sint i = signed_val(tmp_arg1) + signed_val(tmp_arg2);

ASSERT(MY_IS_SSMALL(i) == IS_SSMALL(i));

if (MY_IS_SSMALL(i)) {

result = make_small(i);

STORE_ARITH_RESULT(result);

}

}

arith_func = ARITH_FUNC(mixed_plus);

goto do_big_arith2;

}为了让我们更容易理解 BEAM 分派器是如何实现的,让我们举一个更形象的例子。我们将从一些真正的 external BEAM 代码开始,然后我会发明一些 internal BEAM 指令,并用 C 实现它们。

如果我们从 Erlang 中一个简单的 add 函数开始:

add(A,B) -> id(A) + id(B).编译为 BEAM 码后如下:

{function, add, 2, 2}.

{label,1}.

{func_info,{atom,add},{atom,add},2}.

{label,2}.

{allocate,1,2}.

{move,{x,1},{y,0}}.

{call,1,{f,4}}.

{move,{x,0},{x,1}}.

{move,{y,0},{x,0}}.

{move,{x,1},{y,0}}.

{call,1,{f,4}}.

{gc_bif,'+',{f,0},1,[{y,0},{x,0}],{x,0}}.

{deallocate,1}.

return.(完整代码见 Appendix C 中的 add.erl 和 add.S。)

现在,如果我们聚焦这段代码中函数调用的三条指令:

{move,{x,0},{x,1}}.

{move,{y,0},{x,0}}.

{move,{x,1},{y,0}}.这段代码首先将函数调用 (x0) 的返回值保存在一个新的寄存器 (x1) 中。然后,它将调用者保存寄存器 (y0) 移动到第一个参数寄存器 (x0)。最后,它将 x1 中保存的值移动到调用者保存寄存器 (y0) ,以便在下一个函数调用时依旧存活。

假设我们要在 BEAM 中实现三条指令 move_xx, move_yx, 和 move_xy ( 这些指令在 BEAM 中不存在,我们只是用它们来演示这个例子):

#define OpCase(OpCode) lb_##OpCode

#define Goto(Rel) goto *((void *)Rel)

#define Arg(N) (Eterm *) I[(N)+1]

OpCase(move_xx):

{

x(Arg(1)) = x(Arg(0));

I += 3;

Goto(*I);

}

OpCase(move_yx): {

x(Arg(1)) = y(Arg(0));

I += 3;

Goto(*I);

}

OpCase(move_xy): {

y(Arg(1)) = x(Arg(0));

I += 3;

Goto(*I);

}注意,goto * 中的星号并不意味着解引用,该表达式意味着跳转到地址指针,我们实际上应该将其写为 goto*。

现在假设这些指令的编译后的 C 代码最终被加载在内存地址 0x3000、0x3100 和 0x3200中。当 BEAM 码被加载时,三个移动指令中的代码将被执行指令的内存地址所取代。假设代码 ({move,{x,0},{x,1}}, {move,{y,0},{x,0}}, {move,{x,1},{y,0}}) 被加载到地址 0x1000:

/ 0x1000: 0x3000 -> 0x3000: OpCase(move_xx): x(Arg(1)) = x(Arg(0))

{move,{x,0},{x,1}} { 0x1004: 0x0 I += 3;

\ 0x1008: 0x1 Goto(*I);

/ 0x100c: 0x3100

{move,{y,0},{x,0}} { 0x1010: 0x0

\ 0x1014: 0x0

/ 0x1018: 0x3200

{move,{x,1},{y,0}} { 0x101c: 0x1

\ 0x1020: 0x0

地址 0x1000 处的一个"字"指向 move_xx 指令的实现。如果寄存器 I 包含指向 0x1000 的指令指针,那么分派器将会去获取 *I( 即 0x3000 ) 并跳转到那个地址。 (goto* *I)

在 Chapter 7 中,我们将更深入地研究一些真实的 BEAM 指令以及它们是如何实现的。

5.3. 调度:非抢占,规约值计数

大多数现代多线程操作系统使用抢占式调度。这意味着操作系统决定何时从一个进程切换到另一个进程,而不管进程在做什么。这可以保护其他进程不受某个进程行为不当(例如:没有及时做出让步)的影响。

在使用非抢占式调度器的协作多任务中,运行的进程决定何时让步。这样做的好处是,让步过程可以在已知状态下完成。

例如,在像 Erlang 这样具有动态内存管理和类型标记值的语言中,实现可能被设计成只有在工作内存中没有 ”解除标记 ( untagged )“ 值时进程才会产生进程调度让步。

以 add 指令为例,要添加两个 Erlang 整数,仿真器首先必须解除对整数的标记(译注:值的类型标记被记录在变量所占内存中,要取得整数值,需要先把标签去除),然后将它们相加,然后将结果标记为(译注:增加标签)整数。如果使用了完全抢占式的调度程序,则无法保证在未标记整数时进程不会挂起。或者进程在堆上创建元组时被挂起,只剩下半个元组。这将使遍历挂起的进程堆栈和堆变得非常困难。

在语言级别上,所有进程都是并发运行的,程序员不应该处理显式的调度让步。BEAM 通过跟踪进程运行了多长时间来解决这个问题。这是通过计算规约值来实现的。这个术语最初来自于微积分中使用的数学术语:lambda 演算中使用的 beta-reduction。

BEAM 中规约值的定义并不是很明确,但我们可以把它看作是一小块工作,不会花太长时间 ( too long )。每个函数调用都被视为一次规约计数。BEAM 在进入每个函数时都要做一个测试,以检查进程是否耗尽了所有的规约值。如果有剩余的规约值,函数将被执行,否则进程将被挂起。

由于 Erlang 中没有循环,只有尾部递归函数调用,所以很难编写一个不消耗掉规约计数而完成大量工作的程序。

|

有些 BIFs 只使用 1 个规约计数就可以运行很长时间,比如 term_to_binary 和 binary_to_term。请确保调用这些BIFs时,只使用小项式或 binary,否则可能会将调度器锁定很长一段时间。 另外,如果您编写自己的 NIFs,请确保它们能够产生让步,并与运行时间成比例地使规约值减少。 |

我们将在 Chapter 11 中详细介绍调度器的工作方式。

5.4. 内存管理:垃圾收集

Erlang 支持垃圾回收;作为 Erlang 程序员,您不需要执行显式内存管理。在 BEAM 层面,代码负责检查栈和堆溢出,并在栈和堆上分配足够的空间。

BEAM 指令 test_heap 将确保堆上有足够的空间满足需求。如果需要,该指令将调用垃圾收集器来回收堆上的空间。垃圾收集器将依次调用内存子系统的更底层实现来根据需要分配或释放内存。我们将在 Chapter 12 中详细介绍内存管理和垃圾收集。

5.5. BEAM: 一个虚拟机

BEAM 是一个虚拟机,也就是说它是用软件而不是硬件实现的。已经有项目通过 FPGA 实现 BEAM,同样也没有什么可以阻止任何人在硬件上实现 BEAM。一个更好的描述可能是称 BEAM 为一个抽象的机器,并把它看作可以执行 BEAM 代码的机器的蓝图。事实上,BEAM 中的 "AM" 两个字母就代表 “抽象机器”。

在本书中,我们将不区分抽象机器,虚拟机或它们的实现。在更正式的设定中,抽象机器是计算机的理论模型,虚拟机是抽象机器的软件实现,或者是真实物理机器的软件仿真器。

不幸的是,目前还没有关于 BEAM 的官方规范,它目前仅由 Erlang/OTP 中的实现定义。如果您想实现您自己的 BEAM,您就必须尝试模拟当前的实现,而不知道哪些部分是必要的,哪些部分是偶然的。你必须模仿每一个可观察的行为,以确保你有一个有效的 BEAM 解释器。

6. 模块和 BEAM 文件格式

6.1. 模块

| TODO 什么是模块 如何加载代码 热代码加载是如何工作的 净化(purging)是如何工作的 代码服务器(code server) 是如何工作的 动态代码加载如何工作,代码搜索路径 在分布式系统中处理代码。(与第10章重叠,要看什么去哪里。) 参数化模块 p-mod是如何实现的 p-mod调用的技巧 |

以下是本手稿的一段摘录:

6.2. BEAM 文件格式

关于 beam 文件格式的确切信息来源显然是 beam_lib.erl (参见 https://github.com/erlang/otp/blob/maint/lib/stdlib/src/beam_lib.erl)。实际上,还有一份由Beam的主要开发人员和维护人员编写的关于该格式的描述(参见 http://www.erlang.se/~bjorn/beam_file_format.html),可读性更好,但有些过时。

BEAM 文件格式基于交换文件格式 (interchange file format, EA IFF)#,有两个小的变化。我们将这些不久。IFF文件以文件头开始,后面跟着许多“块”。在IFF规范中有许多主要处理图像和音乐的标准块类型。但是IFF标准也允许您指定自己的命名块,而这正是 BEAM 所做的。

注意:Beam文件与标准IFF文件不同,因为每个块是在4字节边界 (即32位字) 上对齐的,而不是在IFF标准中在2字节边界上对齐的。为了表明这不是一个标准的 IFF 文件,IFF 头被标记为 “FOR1” 而不是 “FOR”。IFF 规范建议将此标记用于未来的扩展。

Beam 使用的 form type 值为:“Beam”。一个 Beam 文件头有以下布局:

BEAMHeader = <<

IffHeader:4/unit:8 = "FOR1",

Size:32/big, // big endian, how many more bytes are there

FormType:4/unit:8 = "BEAM"

>>在文件头之后可以找到多个块。每个块的大小与4字节的倍数对齐,并且(每个块)都有自己的块头部 (见下面描述)。

注意:对齐对于某些平台很重要,在这些平台中,对于未对齐的内存字节访问将产生一个硬件异常(在Linux中称为SIGBUS)。这可能导致性能下降,或者异常可能导致VM崩溃。

BEAMChunk = <<

ChunkName:4/unit:8, // "Code", "Atom", "StrT", "LitT", ...

ChunkSize:32/big,

ChunkData:ChunkSize/unit:8, // data format is defined by ChunkName

Padding4:0..3/unit:8

>>该文件格式在所有区域前加上这个区域的大小,使得在从磁盘读取文件时可以很容易地直接解析文件。为了说明beam文件的结构和内容,我们将编写一个程序,它能从一个 beam 文件中提取所有数据块。为了使这个程序尽可能简单和可读,我们不会在读取时解析文件,而是将整个文件作为二进制文件加载到内存中,然后解析每个块。第一步是得到所有块的列表:

-module(beamfile).

-export([read/1]).

read(Filename) ->

{ok, File} = file:read_file(Filename),

<<"FOR1",

Size:32/integer,

"BEAM",

Chunks/binary>> = File,

{Size, read_chunks(Chunks, [])}.

read_chunks(<<N,A,M,E, Size:32/integer, Tail/binary>>, Acc) ->

%% Align each chunk on even 4 bytes

ChunkLength = align_by_four(Size),

<<Chunk:ChunkLength/binary, Rest/binary>> = Tail,

read_chunks(Rest, [{[N,A,M,E], Size, Chunk}|Acc]);

read_chunks(<<>>, Acc) -> lists:reverse(Acc).

align_by_four(N) -> (4 * ((N+3) div 4)).一次样例运行结果可能是这样的:

> beamfile:read("beamfile.beam").

{848,

[{"Atom",103,

<<0,0,0,14,4,102,111,114,49,4,114,101,97,100,4,102,105,

108,101,9,114,101,97,...>>},

{"Code",341,